This document describes general aspects of the source tree and the test strategies and, starting with chapter Get sources, build and test, the practical approach.

GitHub:

- https://github.com/JzHartmut/src_emC: emC sources to use in other projects
- https://github.com/JzHartmut/Test_emC: test sources, test environment

Hint: Take only the necessary source files into other projects, using copy & compare.

1. Directory-tree for sources, test sources and test environment

See also vishia/SwEng/html/srcFileTree.html: Common file tree structure for sources, build and IDE.

The source tree is organized somewhat similarly to the Gradle or Maven guidelines. There are two aspects:

- The src tree should contain only sources and no temporary generated files. It should be compact and concise.
- Maven's principle "convention over configuration" helps users find their way. Configuration is not that hard, but a convention is necessary for orientation.

Similar to the guidelines for the arrangement of files in Maven or Gradle, the directory tree has the following structure:

./                       ... working tree
+-.git/*                 ... git archive in the working tree (not recommended)
+-.git                   ... alternatively a .git file as link to the repository
+-src-back               ... space for local copies, zips (working also temporarily without repository)
+-build.sh               ... the basic build file for tests
+-README.md              ... info
+-tools/*                ... not in .git, loaded from the internet
+-build/*                ... not in .git, build output (maybe redirected to a RAM disk)
+-result/*               ... contains some results to compare
+-IDE                    ... not in .git, see src/test/IDE; working dirs for IDEs are separated from sources
|  +-MSVC                ... IDE files for Visual Studio tests
|  +-EclCDT              ... some test projects in Eclipse
|  +-TI_TMS320           ... more IDE projects for other test platforms
|
+-src
   +-src_emC             ... the sources of the module, without test (equivalent to 'main' in Maven)
   |  +-.git
   |  +-.gitRepository
   |  +-cpp              ... C++ and C sources
   |  |  +-emC           ... tree of common emC sources
   |  |  +-emC_srcOSALspec ... specific sources
   |  +-make             ... some make files, not for test, only for the sources
   +-test                ... everything for test
   |  +-cpp              ... C++ and C sources
   |  |  +-emC_TestXYZ   ... sources for tests of special topics
   |  |  +-emC_TestAll
   |  |     +-testmain.cpp ... test organisation for the test of all
   |  +-testScripts      ... some scripts to start special tests
   |  +-ZmakeGcc         ... test scripts in JZtxtcmd for the whole test with gcc
   |     +-ZmakeGcc.jztsh
   +-IDE/*               ... the sources of the IDE configuration files
   +-docs                ... place for documentation
   |  +-asciidoc         ... in AsciiDoc
   +-load_tools          ... scripts to load the tools from the internet

The sources that are committed to and compared with git are contained only in the ./src/ tree.

The git repository for the whole source tree also contains the test results in ./result/ and a few script files at the top of the working tree. Some ./IDE/* configuration files may be members too, but no temporary files.

The content of ./build is purely temporary in the working tree.

The ./tools can be loaded from the internet, or can exist as a linked folder shared by several working trees that use the same tools.

1.1. tools

The tools (a few jar files) can be loaded from the internet; see first vishia/SwEng/html/srcFileTree.html: chapter Libs and tools on the source tree.

The git archive Test_emC/.git does not contain the tool files; they should be fetched from the internet instead. But it contains all the information needed to load these files:

- ./src/load_tools/loadTools.sh: a script to load the tools,
- ./src/load_tools/tools.bom: a list of the necessary tools, with version and source,
- ./src/load_tools/vishiaMinisys.jar: a Java program that organizes loading the tools.

Unfortunately, wget, the well-known Linux command, is not available in a standard MinGW installation, nor is it present on every Linux system.

Hence a download function is provided by vishiaMinisys.jar for all systems where Java runs. But vishiaMinisys.jar does more.

GetWebfile works with a bom, a bill of materials; see the article (in German) by Jeff Luszcz: "Risiken bei Open-Source-Software: Warum eine Bill-of-Materials sinnvoll ist" (Risks of open-source software: why a bill of materials makes sense).

java -cp vishiaMinisys.jar org.vishia.minisys.GetWebfile @tools.bom tools/

The tools.bom controls a re-check of vishiaMinisys.jar and the check and download of vishiaBase.jar and vishiaGui.jar. The bom contains MD5 checksums. The already existing vishiaMinisys.jar is checked against its checksum; if it does not match, a warning is output. The other files are downloaded and checked to ensure the download is correct. If they already exist (on a repeated call), their MD5 checksum is computed and compared. Because the MD5 checksums are stored in this git archive, the files cannot be tampered with unnoticed (within the security limits of MD5), neither on the server, nor during the download, nor on your own PC.

The second benefit: it is documented which files are used and where they come from. Other systems load downloaded stuff into a home directory (C:\Users... on Windows), where it is not obvious what was loaded and from where. The third benefit: the sources of these jar files are stored beside the jar files on the server, and the jar files can be built reproducibly (see https://www.vishia.org/Java/html5/source+build/reproducibleJar.html).
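The check described above can be sketched in Java as follows. This is not the code of vishiaMinisys.jar: the class BomCheck and its methods are hypothetical, the download itself is left out, and the parser assumes the line format shown in the tools.bom snapshot in chapter 2.5 (filename.jar@URL ?!MD5=checksum, optionally terminated by ;). Only the MD5 computation uses a standard API, java.security.MessageDigest.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.math.BigInteger;
import java.nio.file.Files;
import java.nio.file.Path;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

/** Hypothetical sketch of a bom check, not the vishiaMinisys implementation. */
public class BomCheck {

  /** One bom entry, parsed from "filename.jar@URL ?!MD5=checksum;". */
  static final class Entry {
    final String name, url, md5;
    Entry(String name, String url, String md5) { this.name = name; this.url = url; this.md5 = md5; }
  }

  /** Returns null for comments, empty lines or lines without the expected parts. */
  static Entry parse(String line) {
    String s = line.trim();
    if (s.isEmpty() || s.startsWith("#")) return null;
    if (s.endsWith(";")) s = s.substring(0, s.length() - 1);
    int at = s.indexOf('@');
    int md5Pos = s.indexOf("?!MD5=");
    if (at < 0 || md5Pos < at) return null;
    return new Entry(s.substring(0, at).trim(),
                     s.substring(at + 1, md5Pos).trim(),
                     s.substring(md5Pos + "?!MD5=".length()).trim().toLowerCase());
  }

  /** MD5 of the content as 32 lower-case hex digits. */
  static String md5Hex(byte[] content) {
    try {
      byte[] digest = MessageDigest.getInstance("MD5").digest(content);
      return String.format("%032x", new BigInteger(1, digest));
    } catch (NoSuchAlgorithmException e) {
      throw new IllegalStateException("MD5 must be supported by every Java platform", e);
    }
  }

  /** true if the local file exists and matches the checksum noted in the bom. */
  static boolean matches(Path file, Entry entry) {
    try {
      return Files.isRegularFile(file) && md5Hex(Files.readAllBytes(file)).equals(entry.md5);
    } catch (IOException e) {
      throw new UncheckedIOException(e);
    }
  }
}
```

A loader built on this would call parse for each bom line, download the url only if matches returns false, and verify the downloaded bytes with md5Hex before keeping them.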

- tools/vishiaBase.jar is a Java executable archive (class files) of about 1.2 MByte, which contains in particular the JZtxtcmd script interpreter. It is used to generate the test scripts and for Reflection generation (further usage of sources). It is a necessary component. This file is downloaded from a given URL. If necessary, you can find the sources of this jar file beside it in the same remote directory. With the sources you can step-debug the tools, for example using the Eclipse IDE https://www.eclipse.org.
- tools/vishiaGui.jar is a Java archive that contains the SimSelectGUI, which is used in src/test/ZmakeGcc/All_Test/test_Selection.jzT.cmd to build and execute specific test cases. It also contains some other classes, for example for the 'inspector' or 'The.file.Commander'.

1.2. build

The build directory is only temporary. It is recommended to redirect it to a RAM disk, which may be the location of the $TMP directory on Windows or /tmp/ on Linux.

Advantage of a RAM disk: it is faster, and your hard disk (SSD) is spared.

For that, ./+clean_mkLinkBuild.bat (Windows) or ./+clean_mkLinkBuild.sh (Linux) can be used to create a linked directory ./build redirected to the tmp folder.

./+clean.bat or ./+clean.sh can be used to clean up the build, either before a fresh build or to finish the work. It not only removes the build link but also cleans the directory in the TMP / tmp area (important for careful use of disk space).

The working tree should be free of temporary or result files; it should contain only sources. This makes it possible to create a 'file copy', for example as a compressed zip file. Besides git, this is another proper way to save a backup version or to share sources.

On the other hand, all work should be done in one single working tree, without complex external file path settings.

With the Gradle file tree concept, the build results are stored in the build directory. Using a link for the build subdirectory, this content can actually be stored inside the tmp directory (by default /tmp on Linux).

1.2.1. Linked directories for build

The following linked directory is created by +clean_mkLinkBuild.bat:

Working_tree
+- build --> $TMP/Test_emC/build

The same is done on Linux by +mkLinkBuild.sh. This script checks whether build exists (in either form) and cleans and creates the temporary directories $TMP/…. That means:

When build.sh starts, it checks whether the build directory exists, either as a link or as a real directory. Only if it does not exist is +mkLinkBuild invoked, to create the link and clean the temporary location. A repeated call of build.sh does not delete anything; it is a repeated build, possibly with changed sources.
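As an illustration only, the decision logic of build.sh can be written in Java. The class BuildLink and its parameters are hypothetical; the real work is done by the .sh and .bat scripts. The sketch uses Files.createSymbolicLink, which works on Linux but on Windows usually requires administrator rights, which is why the scripts use mklink /J there.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.LinkOption;
import java.nio.file.Path;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

/** Hypothetical sketch of the build.sh decision, not the original script. */
public class BuildLink {

  /** Returns true if the link was (re)created, false if build already existed. */
  static boolean ensureBuildLink(Path workingTree, Path tmpTarget) {
    Path build = workingTree.resolve("build");
    try {
      if (Files.isDirectory(build)) {
        return false;                      // link or real directory: repeated build, delete nothing
      }
      Files.deleteIfExists(build);         // removes a dangling link, e.g. after /tmp was cleared
      deleteTree(tmpTarget);               // clean the temporary location
      Files.createDirectories(tmpTarget);
      Files.createSymbolicLink(build, tmpTarget);
      return true;
    } catch (IOException e) {
      throw new UncheckedIOException(e);
    }
  }

  /** Deletes a directory tree bottom-up; does nothing if it does not exist. */
  static void deleteTree(Path dir) throws IOException {
    if (!Files.exists(dir, LinkOption.NOFOLLOW_LINKS)) return;
    List<Path> all;
    try (Stream<Path> paths = Files.walk(dir)) {
      all = paths.sorted(Comparator.reverseOrder()).collect(Collectors.toList());
    }
    for (Path p : all) {
      Files.delete(p);                     // children sort after parents, so reversed order deletes them first
    }
  }
}
```

For the layout shown above, tmpTarget would be $TMP/Test_emC/build. A second call returns false and keeps the built files, matching the rule that a repeated build deletes nothing.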

All directories which contain IDE files (here especially src/test/MSVC/All_Test) should store temporary content in a linked temp directory too. Usually the output directories are beside the IDE files. In these folders a file like +clean_mklink_builds.bat (in this case only for Windows, for the MS Visual Studio IDE) cleans and creates the links in an adequate way. Before opening the IDE for the first time, run this file (double-click it) in its directory.

+cleanAll.bat or +cleanAll.sh in the root cleans all links and temporaries; it should be invoked before zipping. For committing to git, these locations are (or should be) excluded via .gitignore.

Symbolically linked directories have been available under Unix for decades:

ln -s path/to/dst build

On Windows this has also been possible since Windows Vista, but it is not widely known. The corresponding command mklink /D … unfortunately needs administrator rights, which makes it impractical. But the junction form

mklink /J build path\to\dst

runs easily. It creates a directory junction, which behaves like a symbolic link for local directories. It is not obvious why Windows distinguishes between mklink /D, which requires administrator rights, and the junction form mklink /J. Unfortunately the Java built-in variant

java.nio.file.Files.createSymbolicLink(link, target);

invokes the administrator-protected variant of the MS Windows API, hence it is not suitable here.

Because of that, the creation of directory links is programmed twice, in the given .bat and .sh scripts.

1.2.2. Using a RAM disk

A RAM disk has the benefit that access is faster, and especially an SSD is spared. The build content is needed only temporarily; build results should be copied to a distribution anyway.

So a RAM disk is the ideal place to store built files. The content of the RAM disk does not need to be kept after the PC is shut down.

All temporary files can be stored on this non-permanent medium, including some Windows temporaries. Hence the TMP environment variable of MS Windows can be redirected to the RAM disk (via Control Panel, Advanced system settings). The link destinations use $TMP, so they point to the RAM disk if TMP refers to it, or to any other temporary directory otherwise.

1.3. result

The ./result directory contains the latest reports and generation results for comparison and study.

It does not contain libraries, because libraries depend on the platform, the compiler used, etc. They are not provided here.

1.4. IDE

The ./IDE directory contains, in subdirectories, the IDE (Integrated Development Environment) projects for some test environments, for example Visual Studio and Eclipse CDT, but also for some embedded platforms.

A detailed explanation is given in vishia/SwEng/html/srcFileTree.html, chapter Directories for the IDE beside src.

Whether the IDE configuration files are part of the Test_emC git, of specific gits, or mirrored in ./src/IDE* is decided case by case.

1.5. Git repositories

There are two git repositories, both hosted under github/JzHartmut:

- The archive Test_emC contains the test environment and the documentation in AsciiDoc.
- The archive src_emC is placed inside the Test_emC working tree (as src/src_emC). It is not a git submodule, because it is managed on its own.

See chapter Get sources, build and test how to get and use.

1.6. The time stamps of all files

Git does not store the time stamps of files. The reason may be that a make system needs new time stamps to decide what to rebuild. This topic is discussed controversially on the internet. There are better make systems than the classic C/Unix make, which only checks for newer time stamps to decide whether to build or not. A better make system saves and re-uses a hash of each file to detect whether it has changed.
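To illustrate the hash-based approach, the following Java sketch decides from a content hash, not from a time stamp, whether a source must be rebuilt. HashMake is hypothetical and does not show the maker used by the emC test; SHA-256 is an arbitrary choice of hash.

```java
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.HashMap;
import java.util.Map;

/** Hypothetical sketch: rebuild decision by content hash, independent of time stamps. */
public class HashMake {

  /** Hash of each source at its last build, keyed by path. A real tool would persist this. */
  private final Map<String, String> lastHashes = new HashMap<>();

  static String sha256Hex(byte[] content) {
    try {
      StringBuilder hex = new StringBuilder();
      for (byte b : MessageDigest.getInstance("SHA-256").digest(content)) {
        hex.append(String.format("%02x", b & 0xff));
      }
      return hex.toString();
    } catch (NoSuchAlgorithmException e) {
      throw new IllegalStateException(e);
    }
  }

  /** true if the source is new or its content changed since the last recorded build. */
  boolean needsBuild(String path, byte[] content) {
    return !sha256Hex(content).equals(lastHashes.get(path));
  }

  /** Records the hash after a successful build. */
  void built(String path, byte[] content) {
    lastHashes.put(path, sha256Hex(content));
  }
}
```

With such a decision, copying or cloning a file changes only its time stamp and does not trigger a rebuild, which is why restoring the original time stamps does no harm.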

Time stamps are useful to find out when a file was changed, which can be essential during development. Especially when comparing file trees, it is very helpful to see the original time stamps. If you have a specific version, all original files should have a time stamp older than the version's date. If they carry the date of copying or cloning instead, it is harder to decide which files you changed yourself (with a newer time stamp) and which are the originals, because the clone or copy date may fall into the same time range as your changes. For example, you work on your project and in the meantime you update a repository.

Unix (before Linux) and Linux handle time stamps differently from Windows. DOS and Windows have always preserved the time stamp on copying, which makes it easier to see what happened. On Unix, in contrast, the principle of make was always present (Unix tools were often delivered only as sources for different machines). The standard Unix make uses the time stamps; more exactly, it compares the time stamp of a source with that of the compilation result and rebuilds if the source is newer. That is why on Unix copying or creating a file has always produced a new time stamp.

Because the emC test uses a better make tool than the standard one on Linux/Unix, the files do not need a newer time stamp to trigger compilation. Instead, a comparison with a list is done. That is why it is possible, and recommended, to adjust the time stamps of the files after cloning or getting a zip from GitHub.

For that reason, a file

./.filelist

is always part of the repository. It contains the time stamps, and also the length and a CRC checksum, of all files. The list is (or should be) updated with the current time stamps before each commit.

This is done automatically when using vishiaGitGui.html.

A manual update can be invoked by calling +buildTimestamps.sh in the root of the file tree. The script checks whether it finds ./tools (as expected in the working tree); if not, it calls ~/cmds/javavishia.sh to execute Java with the vishia jar files:

Test_emC:

echo build the timestamp in .filelist from all files:
Filemask="[[result/**|src/[IDE*|buildScripts|cmds|docs|load_tools|test]/**]/[~#*|~*#]|~_*]"
currdir="$(dirname $0)"
if test -f $currdir/tools/vishiaBase.jar; then
  java -cp $currdir/tools/vishiaBase.jar org.vishia.util.FileList L -l:$currdir/.filelist -d:$currdir "-m:$Filemask"
else
  ~/cmds/javavishia.sh org.vishia.util.FileList L -l:$currdir/.filelist -d:$currdir "-m:$Filemask"
fi

The option -m: (mask) selects the files which are members of the list.

A detailed description of this mask argument can be found in org.vishia.util.FilepathFilterM (used inside org.vishia.util.FileList).

Here it means that only the directories [result/**|src/...] are candidates for the list, plus all files on the root level matching ~_*, that is, all files except those starting with an underscore.

In the src directory only src/[IDE*|buildScripts|cmds|docs|load_tools|test] are regarded. The other directories inside src have their own .filelist and git repository.

Inside these src/… directories, /** means that all subdirectories are listed, and on the file level [~#*|~*#] excludes files starting or ending with #.
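To make the mask semantics concrete, the following Java sketch approximates the Test_emC mask with plain string tests and produces one line per selected file. It is not org.vishia.util.FileList and does not implement the FilepathFilterM syntax; the class name, the line format (time stamp, length, CRC, relative path) and the use of CRC32 are assumptions, since the real .filelist format is defined by the tool.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;
import java.util.zip.CRC32;

/** Hypothetical sketch: rough equivalent of the Test_emC mask, not org.vishia.util.FileList. */
public class FileListSketch {

  static final List<String> SRC_DIRS = Arrays.asList("buildScripts", "cmds", "docs", "load_tools", "test");

  // approximates [[result/**|src/[IDE*|buildScripts|cmds|docs|load_tools|test]/**]/[~#*|~*#]|~_*]
  static boolean selected(Path rel) {
    String name = rel.getFileName().toString();
    if (rel.getNameCount() == 1) {
      return !name.startsWith("_");                              // root level: ~_*
    }
    if (name.startsWith("#") || name.endsWith("#")) {
      return false;                                              // [~#*|~*#]
    }
    String first = rel.getName(0).toString();
    if (first.equals("result")) {
      return true;                                               // result/**
    }
    if (!first.equals("src") || rel.getNameCount() < 3) {
      return false;                                              // other top-level dirs, files directly in src
    }
    String second = rel.getName(1).toString();
    return second.startsWith("IDE") || SRC_DIRS.contains(second); // src/[IDE*|...]/**
  }

  // one line in an assumed format: time stamp (ms), length, CRC32 (hex), path with '/'
  static String entry(Path root, Path file) {
    try {
      byte[] content = Files.readAllBytes(file);
      CRC32 crc = new CRC32();
      crc.update(content);
      long time = Files.getLastModifiedTime(file).toMillis();
      String rel = root.relativize(file).toString().replace('\\', '/');
      return time + " " + content.length + " " + Long.toHexString(crc.getValue()) + " " + rel;
    } catch (IOException e) {
      throw new UncheckedIOException(e);
    }
  }

  static List<String> build(Path root) {
    try (Stream<Path> files = Files.walk(root)) {
      return files.filter(Files::isRegularFile)
                  .filter(p -> selected(root.relativize(p)))
                  .sorted()
                  .map(p -> entry(root, p))
                  .collect(Collectors.toList());
    } catch (IOException e) {
      throw new UncheckedIOException(e);
    }
  }
}
```

Directories such as src/src_emC are skipped, as in the original mask, because they carry their own .filelist.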

A simpler mask is given for the pure source archive:

src_emC:

echo build the timestamp in .filelist from all files:
Filemask="[**/[~#*|~*#]|~_*]"
currdir="$(dirname $0)"
if test -f $currdir/../../tools/vishiaBase.jar; then
  java -cp $currdir/../../tools/vishiaBase.jar org.vishia.util.FileList L -l:$currdir/.filelist -d:$currdir "-m:$Filemask"
else
  ~/cmds/javavishia.sh org.vishia.util.FileList L -l:$currdir/.filelist -d:$currdir "-m:$Filemask"
fi

This simpler mask means: **/[~#*|~*#] covers directories of all depth levels, with files not starting or ending with #, and the root file level is handled as above with ~_*.

The ~ is the negation (exclude) operator; * is the usual wildcard in file path masks. Alternatives are written as [..|..] at the start of a sequence (not after a *); they may be nested and may contain wildcards.

That's all.

Refer to org.vishia.util.FileList for the detailed documentation of this tool.

The mask should follow the content of the git archive, but the two are not identical in every case; git uses the .gitignore file for a similar purpose.

Files which are contained in the git archive but not in the .filelist do not get the proper time stamp; that is not a problem if only a few files are affected.

Files which are included in the .filelist but do not exist when touching are reported on the console. Maybe they are missing in the git archive, which is detected while restoring (checkout, update, clone). That is also not a serious problem, but the mask and the git content should be kept in line as well as possible.

As the script lines show, the approach described in chapter Linux and Window: jar files and some scripts in /home/usr/cmds or ~/cmds and ~/vishia/tools is used here.

This command should work in all situations, also on mirror files with a .git archive, independent of ./tools in the working tree (chapter tools).

To restore the time stamps, execute +adjustTimestamps.sh. This script checks whether it finds the tools directory (as expected in the working tree); if not, it calls ~/cmds/javavishia.sh to execute Java with the vishia jar files:

src_emC:

echo touch all files with the timestamp in .filelist:
currdir="$(dirname $0)"
if test -f $currdir/../../tools/vishiaBase.jar; then
  java -cp $currdir/../../tools/vishiaBase.jar org.vishia.util.FileList T -l:$currdir/.filelist -d:$currdir
else
  ~/cmds/javavishia.sh org.vishia.util.FileList T -l:$currdir/.filelist -d:$currdir
fi

When restoring a time stamp, the tool first tests whether the file is unchanged; only then is the time stamp restored. A change is detected primarily by the file length (a fast operation). If the length is identical, the CRC is computed from the content, and only if the CRC matches as well is the time stamp applied. There is a very small chance that a changed file has the same length and the same CRC.
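The restore rule (length first, then CRC, then time stamp) can be sketched in Java as below. TimestampRestore is hypothetical, and CRC32 from java.util.zip is assumed as checksum; the CRC variant actually used by org.vishia.util.FileList is not specified here.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.attribute.FileTime;
import java.util.zip.CRC32;

/** Hypothetical sketch of the restore rule, not the code of org.vishia.util.FileList. */
public class TimestampRestore {

  enum Result { RESTORED, MISSING, CHANGED }

  static long crc32(byte[] content) {
    CRC32 crc = new CRC32();
    crc.update(content);
    return crc.getValue();
  }

  /** Applies timeMillis only if length and CRC match the values noted in the list. */
  static Result restore(Path file, long length, long crc, long timeMillis) {
    try {
      if (!Files.isRegularFile(file)) {
        return Result.MISSING;                 // the real tool reports this on the console
      }
      if (Files.size(file) != length) {
        return Result.CHANGED;                 // fast check without reading the content
      }
      if (crc32(Files.readAllBytes(file)) != crc) {
        return Result.CHANGED;                 // same length, but different content
      }
      Files.setLastModifiedTime(file, FileTime.fromMillis(timeMillis));
      return Result.RESTORED;
    } catch (IOException e) {
      throw new UncheckedIOException(e);
    }
  }
}
```

Reading the content only when the length matches keeps the common case (many unchanged files) cheap.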

Refer to org.vishia.util.FileList for the detailed documentation of this tool.

2. Infrastructure on the PC for Test_emC

The emC software should be tested on a PC, either with Windows (Win-10 recommended) or with Linux. For compiling the test files, gcc (GNU Compiler Collection) is used, which is available on Windows via MinGW or Cygwin.

Especially for Windows, a Visual Studio project is provided:

IDE/MSVC/AllTest_emC_Base.sln

The projects can be used individually by opening the appropriate *.sln file.

The emC software is intended for embedded targets, but the concept includes testing the algorithms in their original C/C++ form on the PC platform.

2.1. Windows or Linux

Both systems can be used. On Windows, MS Visual Studio is supported. On Windows and Linux, Eclipse CDT is used. For the tests, gcc is used on both systems.

Important: Case-sensitive file names

File names on Windows are not case-sensitive: errors in upper/lower case are not detected. On Linux, however, file names are case-sensitive. Windows stores the case of letters but does not evaluate it.

Rule: All file names should be used case-sensitively, with the proper case. The case of the file name in the git repository is authoritative. All #include <…> lines should use the proper case.

That means a test under Linux is necessary to detect wrong case in file names.

It was observed that a wrong case of a file name in the MS Visual Studio project files forces that wrong case when the file is edited and saved. Other tools respect the case on the file system. Hence the case in the MS VS project files should also be correct.
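Because Windows does not evaluate the case, a small check can compare an #include path with the names actually stored in the directories; this also works on Windows. The Java sketch below is a hypothetical helper (IncludeCaseCheck is not part of emC or the vishia tools), and the file names in the test are only examples. It complements, but does not replace, a test build under Linux.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

/** Hypothetical helper: checks an include path against the exact case on the file system. */
public class IncludeCaseCheck {

  /** Returns null if relPath exists below dir with exactly this case, else a message. */
  static String check(Path dir, String relPath) {
    Path current = dir;
    for (String part : relPath.split("/")) {
      if (part.isEmpty() || part.equals(".") || part.equals("..")) {
        current = current.resolve(part);   // no case to check for these components
        continue;
      }
      List<String> names;
      try (Stream<Path> entries = Files.list(current)) {
        names = entries.map(p -> p.getFileName().toString()).collect(Collectors.toList());
      } catch (IOException e) {
        throw new UncheckedIOException(e);
      }
      if (!names.contains(part)) {         // exact match first, also on case-sensitive systems
        for (String name : names) {
          if (name.equalsIgnoreCase(part)) {
            return "case mismatch: '" + part + "' but file system has '" + name + "'";
          }
        }
        return "not found: " + relPath;
      }
      current = current.resolve(part);
    }
    return null;
  }
}
```

Applied to every #include line together with the include directories of a project, this reports the entries that would fail on Linux.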

Important: Line end \n

All files in emC should use the line ending \n as in Unix/Linux. This is possible on Windows too. Most Windows programs accept a plain \n (not only \r\n) as line ending, except the standard Windows Notepad in older Windows versions. But that program usually does not need to be used.

The git rule for line endings should be 'store as is, get as is'. That means the line ending \n is also stored in the emC git repositories.

A conversion to \r\n just because 'we are on Windows', as is common in the mainstream, is counterproductive and unnecessary.

If some files have \r\n as line ending, it is a mistake, even though some editors do not show it.
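A minimal Java sketch for finding and converting \r\n line ends. LineEndCheck is a hypothetical helper, not part of the emC tools. It works on bytes, which is safe for ASCII and UTF-8 files because the bytes \r and \n never occur inside a UTF-8 multi-byte sequence.

```java
import java.io.ByteArrayOutputStream;

/** Hypothetical helper for the "\n only" rule, not part of the emC tools. */
public class LineEndCheck {

  /** Number of "\r\n" line ends in the content; 0 is expected for emC files. */
  static int countCrLf(byte[] content) {
    int count = 0;
    for (int i = 0; i + 1 < content.length; i++) {
      if (content[i] == '\r' && content[i + 1] == '\n') count++;
    }
    return count;
  }

  /** Converts every "\r\n" to "\n"; a lone '\r' is left untouched. */
  static byte[] toLf(byte[] content) {
    ByteArrayOutputStream out = new ByteArrayOutputStream(content.length);
    for (int i = 0; i < content.length; i++) {
      boolean crBeforeLf = content[i] == '\r' && i + 1 < content.length && content[i + 1] == '\n';
      if (!crBeforeLf) out.write(content[i]);
    }
    return out.toByteArray();
  }
}
```

Reading and writing the file around toLf, for example with Files.readAllBytes and Files.write, is left out.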

MS Visual Studio supports working with \n as line ending. This is configured by the file

IDE/MSVC/.editorconfig

See the comments there. MS Visual Studio detects and uses this file.

Important: Using slash in include paths and all other paths

All C/C++ compilers, also on Windows, accept the slash / in include paths. Only specific Windows programs (MS Visual Studio internally) use the backslash \.

Meanwhile, many Windows programs accept the slash too.

Because the scripts to compile etc. are shell scripts, the slash is used there too. JZtxtcmd scripts use the slash. Java accepts the slash in all file paths, also on Windows.

Don't use tabs

Tabs in texts are a bad choice because editors use different tab widths, and the text layout falls apart. It is not really necessary to choose one's 'own' width for indentation. The emC sources use an indentation of two characters per level. That is enough to be clearly visible, and the indentation can easily be typed manually if necessary. If 4 characters are used for indentation (as is common), you have to count while typing, which is unnecessary effort.

Tabs inside a text are often not visible, and it is confusing if the cursor moves to unexpected positions when using the arrow keys.

Automatically removing trailing white space is a good idea.
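The two rules (no tabs, no trailing white space) are easy to check automatically. The following Java sketch is a hypothetical checker, not a tool delivered with emC; it reports findings per line and can strip trailing spaces and tabs while keeping \n line ends.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical checker for the rules above: no tabs, no trailing white space. */
public class WhitespaceCheck {

  /** Returns one message per finding, with 1-based line numbers. */
  static List<String> check(String text) {
    List<String> findings = new ArrayList<>();
    String[] lines = text.split("\n", -1);       // -1 keeps trailing empty lines
    for (int i = 0; i < lines.length; i++) {
      String line = lines[i];
      if (line.indexOf('\t') >= 0) {
        findings.add((i + 1) + ": tab");
      }
      // a '\r' left over from a "\r\n" line end also counts as trailing white space
      if (!line.isEmpty() && Character.isWhitespace(line.charAt(line.length() - 1))) {
        findings.add((i + 1) + ": trailing white space");
      }
    }
    return findings;
  }

  /** Removes spaces and tabs at the end of every line; "\n" line ends are kept. */
  static String stripTrailing(String text) {
    return text.replaceAll("[ \\t]+(?=\n|$)", "");
  }
}
```

Replacing tabs is deliberately left out, because the right number of spaces depends on the tab width the author used.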

2.2. Cygwin or MinGW

On Windows, MinGW, Cygwin or similar should be installed to provide sh.exe for Unix shell scripts and the GNU Compiler Collection (gcc). This should be familiar to anyone who uses gcc.

Originally I used MinGW; it ran with gcc, but it seemed to lack pthread support. Everything except my src_emC/emC_srcOSALspec/os_LinuxGcc/os_thread.c worked. Then I changed to Cygwin, used it, and also tested under original Linux; everything runs well. I have not yet had time to revisit MinGW. The content regarding MinGW has been moved to the last chapter; it may or may not be helpful.

2.3. Git available

Git should be accessible via the command line:

git ....

It is often already available; otherwise it should be installed from git-scm. On MS Windows, git should be installed to

C:\Program Files\git

Otherwise it may not run properly. Git also contains a git/mingw64 part. Both bin directories should be added to the system PATH (this may be done correctly by the installation). The MinGW inside git does not contain any compiler, but usually the same Linux commands, including sh.exe.

Any graphical git environment can be installed, for example TortoiseGit, but it is not required for working with the emC sources; it only helps with git actions.

On a Windows PC I have installed an ordinary git:

c:\Program Files\git
  <DIR>    bin
  <DIR>    cmd
  <DIR>    dev
  <DIR>    etc
  <DIR>    mingw64
  <DIR>    usr
  152.112  git-bash.exe
  151.600  git-cmd.exe
   18.765  LICENSE.txt
  160.771  ReleaseNotes.html

The system PATH was extended by this installation and contains:

PATH=...;c:\Program Files\git\mingw32\bin;c:\Program Files\git\bin;c:\Program Files\git\usr\bin;...

That means git and also the mingw32 stuff delivered with git are in the PATH. But mingw32 does not contain the gcc compiler suite.

Hence I installed Cygwin on the PC (cygwin.com), selected the appropriate tools, and got:

c:\Programs\Cygwin
  <DIR>    bin
  <DIR>    dev
  <DIR>    etc
  <DIR>    home
  <DIR>    lib
  <DIR>    sbin
  <DIR>    tmp
  <DIR>    usr
  <DIR>    var
   53.342  Cygwin-Terminal.ico
       66  Cygwin.bat
  157.097  Cygwin.ico

It is not installed in the Windows standard folder but in its own directory tree, and it is not included in the system PATH.

2.4. For MS-Windows: Associate extension .sh to unix-script.bat

To execute Unix (Linux) shell scripts with the extension .sh simply, you can write a batch file unix-script.bat that can be found via the PATH.

Templates of these files are stored in src/load_tools/windows_shellscriptBatches_template/.

You should copy these files into a directory included in the system PATH (on my computer C:\Programs\Batch\*) and associate the extension .sh with unix-script.bat:

unix-script.bat:

::git-script.bat %1 %2
::mingw-script.bat %1 %2
cygwin-script.bat %1 %2

Here you can simply switch, by commenting lines in or out, which of the following scripts is executed.

git-script.bat:

@echo off
REM often used in shell scripts, set it:
set JAVAC_HOME=C:/Programs/Java/jdk1.8.0_241
set JAVA_HOME=C:/Programs/Java/jre1.8.0_241
set PATH=C:\Program Files\git\mingw64\bin;%JAVA_HOME%\bin;%PATH%
::set PATH=%JAVA_HOME%\bin;%PATH%
REM sh.exe needs an home directory:
set HOMEPATH=\vishia\HOME
set HOMEDRIVE=D:
REM possible other working dir
if not "" == "%2" cd "%2"
REM -x to output the command as they are executed.
REM %1 contains the whole path, with backslash, sh.exe needs slash
REM Preparation of the scriptpath, change backslash to slash,
set SCRIPTPATHB=%1
set "SCRIPTPATH=%SCRIPTPATHB:\=/%"
echo %SCRIPTPATH%
echo on
"C:\Program Files\git\bin\sh.exe" -c "%SCRIPTPATH%"
REM to view problems let it open till key pressed.

pause

cygwin-script.bat:

@echo off
if not "%1" == "" goto :start
echo Start of cygwin bash.exe maybe with shell script
echo -
echo cygwin_script.bat PATH_TO_SCRIPT [WORKING_DIR]
echo -
echo * PATH_TO_SCRIPT like given in windows on double click, absolute with backslash
echo   or relative from WORKING_DIR if given, may be also with slash
echo * WORKING_DIR optional, if given Windows-like with backslash (!)
echo   else current dir is the working dir.
echo * Adapt inner content to setup where Java, MinGW or Cygwin is able to find
echo   and where the home is located!
:start
echo PATH_TO_SCRIPT=%1
echo WORKING_DIR=%2
REM sh.exe needs an home directory:
set HOMEPATH=\vishia\HOME
set HOMEDRIVE=D:
REM often used in shell scripts, set it before it is used in PATH:
set JAVAC_HOME=C:\Programs\Java\jdk1.8.0_241
set JAVA_HOME=C:\Programs\Java\jre1.8.0_291
echo "JAVAC_HOME=>>%JAVAC_HOME%<<"
set Cygwin_HOME=c:\Programs\Cygwin
set PATH=%JAVA_HOME%\bin;%JAVAC_HOME%\bin;%PATH%
set PATH=%Cygwin_HOME%\bin;%PATH%
::PATH
echo bash from Cygwin used: %Cygwin_HOME%
where bash.exe
REM Settings for home in Unix:
REM possible other working dir
if not "" == "%2" cd "%2"
REM Preparation of the scriptpath, change backslash to slash,
set SCRIPTPATHB=%1
set "SCRIPTPATH=%SCRIPTPATHB:\=/%"
echo Scriptpath = %SCRIPTPATH%
REM comment it to use mingw, execute to use cygwin
echo current dir: %CD%
echo on
bash.exe -c %SCRIPTPATH%
echo off
REM to view problems let it open till key pressed.
pause

The major difference between git-script.bat and cygwin-script.bat is that the latter sets the PATH to the compiler, which is not contained in the git shell installation.

See the next chapter.

Some details:

set "SCRIPTPATH=%SCRIPTPATHB:\=/%"

converts the backslashes (given in the calling argument on double-click) to the necessary slashes.

The HOMEPATH and HOMEDRIVE variables set the home directory known from Unix/Linux. So you can execute Unix/Linux shell scripts nearly as usual on the original systems.

(… adaption of the operating system access to Windows).

This script also sets the PATH to the desired Java version. The operating system may by default use another Java version, such as Java 16, but the Java parts have so far been tested only with Java 8 (2021-04). See the next chapters.

Important:

Because git comes with some mingw32 stuff, it may conflict with the Cygwin installation used here. Hence the Cygwin entry must come first in the PATH variable. It may be possible to remove the c:\Program Files\git\mingw32\bin entry added by git, but then some git stuff may not run outside of this test environment. If the PATH contains Cygwin first, all executables available in Cygwin are found first and used. If you add Cygwin at the end of the PATH, some executables from the git MinGW are used instead of the Cygwin ones, which results in a version mismatch.

If you want to use pure git independently of Cygwin (for other purposes), you can use another batch file that puts only git in the PATH (I have a corresponding git-script.bat file), or you can add the git path in the system settings.

2.5. Linux and Window: jar files and some scripts in /home/usr/cmds or ~/cmds and ~/vishia/tools

tools

The jar files which are necessary for some operations, such as getting the sources, are the same as described in subchapter tools of the first chapter (directory tree).

It is recommended to store these jar files in a central location, either in your own home directory on Linux or in a central directory /usr/vishia/tools/ for all users of a Linux system. On Windows they may be stored in C:\Programs\vishia\tools\.

Then you can create symbolic links to this location, and you always have the same jar files with their sources ready for use, independent of your concrete work.

You can use

- ./src/load_tools/loadTools.sh: a script to load the tools,
- ./src/load_tools/tools.bom: a list of the necessary tools, with version and source,
- ./src/load_tools/vishiaMinisys.jar: a Java program that organizes loading the tools,

from the Test_emC zip file or a git clone to get these jar files.

For the following explanation it is assumed that this central directory for the tools is

~/vishia/tools/*

where ~/ is the home directory on Linux, and on Windows a suitable location configured for the execution of shell scripts.

After downloading the tools (or copying from a zip) you find there:

hartmut@hartmut67:~/vishia/tools$ ls -all
total 11308
drwxr-xr-x 2 hartmut hartmut    4096 Jan 23 05:28 .
drwxr-xr-x 5 hartmut hartmut    4096 Jan 23 09:10 ..
-rwxr-xr-x 1 hartmut hartmut     531 Nov 18  2021 +load.sh
-rw-r--r-- 1 hartmut hartmut 2168046 Jan 23 05:28 org.eclipse.swt.linux.x86_64.jar
-rw-r--r-- 1 hartmut hartmut 1919665 Jan 23 05:28 org.eclipse.swt.linux.x86_64-sources.jar
-rw-r--r-- 1 hartmut hartmut 2047863 Jan 23 05:28 org.eclipse.swt.win32.win32.x86_64-3.116.100.source.jar
-rw-r--r-- 1 hartmut hartmut 2481009 Jan 23 05:28 org.eclipse.swt.win32.x86_64.jar
-rwxr-xr-x 1 hartmut hartmut    1914 Jan 23 05:25 tools.bom
-rw-r--r-- 1 hartmut hartmut 1407166 Jan 23 05:28 vishiaBase.jar
-rw-r--r-- 1 hartmut hartmut 1130186 Jan 23 05:28 vishiaGui.jar
-rwxr-xr-x 1 hartmut hartmut   89242 Jan 23 05:01 vishiaMinisys.jar
-rw-r--r-- 1 hartmut hartmut  307302 Jan 23 05:28 vishiaVhdlConv.jar

As you can see in this snapshot from an SSH shell on a Linux computer, the sources are also present here as jar files.

The jar files are stored under canonical names. The real names with version are contained in tools.bom, shown here as a snapshot:

#Format: filename.jar@URL ?!MD5=checksum

#The minisys is part of the git archive because it is needed to load the other jars, MD5 check

vishiaMinisys.jar@https://www.vishia.org/Java/deploy/vishiaMinisys-2022-10-21.jar ?!MD5=8241354a93291ea18889f3ca5ec19a74;

#It is needed for the organization of the generation.

vishiaBase.jar@https://www.vishia.org/Java/deploy/vishiaBase-2022-10-21.jar ?!MD5=c2c2ae29bade042ab060a247e213d64d;

#It is needed for the GUI to select test cases.

vishiaGui.jar@https://www.vishia.org/Java/deploy/vishiaGui-2022-02-03.jar ?!MD5=0759d5580386b53b3be4f66e94cbd3ae;

#Eclipse-SWT as graphic layer adaptation from Java to Windows-64

org.eclipse.swt.win32.x86_64.jar@https://repo1.maven.org/maven2/org/eclipse/platform/org.eclipse.swt.win32.win32.x86_64/3.116.100/org.eclipse.swt.win32.win32.x86_64-3.116.100.jar ?!MD5=e51875310d448d39e7bf1d5faf268ce3

org.eclipse.swt.linux.x86_64.jar@https://repo1.maven.org/maven2/org/eclipse/platform/org.eclipse.swt.gtk.linux.x86_64/3.122.0/org.eclipse.swt.gtk.linux.x86_64-3.122.0.jar ?!MD5=d201d62e8f6c73022649f15ce60580ee

##Special tool for Java2Vhdl

vishiaVhdlConv.jar@https://www.vishia.org/Java/deploy/vishiaVhdlConv-2022-10-21.jar ?!MD5=b01ad5f9e0bc93896328d051eddcc287;

## Sources

org.eclipse.swt.win32.win32.x86_64-3.116.100.source.jar@https://repo1.maven.org/maven2/org/eclipse/platform/org.eclipse.swt.win32.win32.x86_64/3.116.100/org.eclipse.swt.win32.win32.x86_64-3.116.100-sources.jar ?!MD5=f22f457acbae0c32ddf0455579911e4d

org.eclipse.swt.linux.x86_64-sources.jar@https://repo1.maven.org/maven2/org/eclipse/platform/org.eclipse.swt.gtk.linux.x86_64/3.122.0/org.eclipse.swt.gtk.linux.x86_64-3.122.0-sources.jar ?!MD5=fccd49c89a649a2ca2874bf236a4319d

cmds

To call Java simply when different versions are available on the PC, or to call JZtxtcmd scripts in a Linux environment without having to change the PATH, you should have a cmds directory in your home location:

/home/user/cmds/ or, shorter, ~/cmds/

This also applies to the shell script execution environment on MS-Windows, see the previous chapter For MS-Windows: Associate extension .sh to unix-script.bat and its settings of HOMEPATH and HOMEDRIVE.

Some scripts should be present in this directory, adapted to call JZtxtcmd scripts and Java programs.

Note: JZtxtcmd is frequently used for generation in emC.

These scripts are contained as templates in ./src/load_tools/cmds_template, to be copied and adapted.

$(dirname $0)/javavishia.sh org.vishia.jztxtcmd.JZtxtcmd $1 $2 $3 $4 $5 $6 $7 $8 $9

This script simply calls the class org.vishia.jztxtcmd.JZtxtcmd with the script to execute.

## This is the start script for JZtxtcmd execution in shell scripts (usable also on MS-Windows).

## It should be copied in a directory which is referenced by the Operation system's PATH

## or it should be copied to ~/cmds/jztxtcmd.sh

## and adapt with the paths to Java and the other tools.

## Everything needed to run JZtxtcmd is referenced here.

## The TMP variable may be used by some scripts. Export it to a defined location if necessary:

## On MinGW in Windows the /tmp directory seems to map to the value of the $TMP environment variable.

export TMP="/tmp"

## Set the variable to refer the jar files to execute JZtxtcmd and all Java

## They should be found near this file. $(dirname $0) is the directory of this file.

## Or in one of the other locations. The first location found is used.

export VISHIAJAR="C:/Programs/vishia/tools" ## try first absolute location for windows

if ! test -f $VISHIAJAR/vishiaBase.jar; then

export VISHIAJAR="$HOME/vishia/tools" ## home location only usable for Linux

if ! test -f $VISHIAJAR/vishiaBase.jar; then

# export VISHIAJAR="$(dirname $0)/../../tools" ## situation in a working tree in src/cmds/jztxtcmd.sh

# if ! test -f $VISHIAJAR/vishiaBase.jar; then

# export VISHIAJAR="$(dirname $0)/../vishia/tools" ## situation in ~/cmds/jztxtcmd.sh

# if ! test -f $VISHIAJAR/vishiaBase.jar; then ##Note: the java cmd line needs windows-oriented writing style also in MinGW under Windows.

echo problem tools for jar files not found

# fi

# fi

fi

fi

echo VISHIAJAR=$VISHIAJAR

## If necessary adapt the PATH for a special Java version. Comment it out if the standard Java installation should be used.

## Note Java does not need an installation. It runs well if it is only present in the file system.

## swt is the graphic adaption, different for Linux and Windows:

if test -d /C/Windows; then

export JAVA_HOME="/c/Programs/Java/jre1.8.0_291"

export SWTJAR="org.eclipse.swt.win32.x86_64.jar"

JAVAPATHSEP=";"

else

export JAVA_HOME="/usr/local/bin/JDK8"

export SWTJAR="org.eclipse.swt.linux.x86_64.jar"

JAVAPATHSEP=":"

fi

export PATH="$JAVA_HOME/bin:$PATH"   ## the shell PATH uses ':' also in MinGW, JAVAPATHSEP is only for java -cp

#echo PATH=$PATH

## adapt the path to the Xml-Tools. See zbnfjax-readme.

## The XML tools are necessary for some XML operations. This environment variable may be used in some JZtxtcmd scripts.

## Comment it out if not used.

##export XML_TOOLS=C:\Programs\XML_Tools

## This is the invocation of JZtxtcmd, with up to 9 arguments.

echo Note: the java cmd line needs windows-oriented writing style also in MinGW under Windows.

echo java -cp $VISHIAJAR/vishiaBase.jar$JAVAPATHSEP$VISHIAJAR/vishiaGui.jar$JAVAPATHSEP$VISHIAJAR/$SWTJAR $1 $2 $3 $4 $5 $6 $7 $8 $9

java -cp $VISHIAJAR/vishiaBase.jar$JAVAPATHSEP$VISHIAJAR/vishiaGui.jar$JAVAPATHSEP$VISHIAJAR/$SWTJAR $1 $2 $3 $4 $5 $6 $7 $8 $9

In the last script some things have to be adapted:

-

the location of Java (JAVA_HOME),

-

the location of the jar files from vishia (VISHIAJAR).

The content of the script can be copied from here, and adapted if necessary.

2.6. Sense or nonsense of local PATH enhancements

You can extend the PATH locally, as is done in the unix-script.bat start batch. An exported variable is also valid inside the scripts called from there (on Windows inside the whole console process). This approach is well known to experienced users.

The other possibility: when a tool is installed, its installer extends the system settings. Then the tool runs without any scripting. This is the common way for ordinary installations.

Setting a specific path in the PATH variable inside a script has the advantage of transparency: you see what is really necessary, and you can choose between different tools and versions which use the same command names (sh.exe, gcc.exe etc.).

2.7. Java JRE8 available

Java should be standard on any PC system. Some build and translation tools use JRE8. Check this with the console command:

java -version

(Java 8 accepts only -version; the GNU-style --version exists since Java 9.)

On Linux the tools have been tested with OpenJDK Runtime 11.0.6 and run.

If another JRE version (higher than 8) is the default and the tools do not run with it, you can adapt the PATH for a JRE8, see chapter For MS-Windows: Associate extension .sh to unix-script.bat:

set PATH=path/to/JRE8/bin;%PATH%    ..extend the PATH so that JRE8 is found first

This defines a locally extended PATH without changing the system environment.

2.8. gcc available

For Linux the gcc package (GNU) should be installed:

apt-get install gcc
apt-get install g++

This should be familiar to Linux users who compile C/C++.

On MS-Windows gcc is contained in Cygwin, see above.

3. Test strategies: individual and complete tests, documentation

The test of modules (units) has four aspects:

-

a: The manual step by step test to see what is done in detail, the typical developer test.

-

b: A manual running test while developing for specific situations

-

c: The nightly build test, to assure that everything is correct and to avoid bugs during improvements.

-

d: Tests are important to document the usage of a module / component.

Point a: is the most self-evident for the developer; it is the first test aspect one should use.

Point b: is similar to a: but without single-step debugging. The test results are:

-

Compiler errors, depending on the used compiler and environment settings, are an interesting test result. For example, software may run with the IDE’s compiler but fail with another compiler.

-

Output of test results, see chapter Test check and output in the test files

Both a: and b: work together and can be executed in parallel by selecting the same test environment, see chapter Test Selection - arrange test cases.

Point c: is the primary aspect for continuous integration.

Point d: is the most important one for a user of the sources. The tests serve as examples of how the sources work.

3.1. What is tested? C-sources, compiler optimization effects and in target hardware

Firstly, the algorithm of the C sources should be tested. It should be independent of the used compiler and its options. Hence any compiler can be used to test the sources, for example a Visual Studio compiler, gcc or another.

Secondly, an algorithm may work properly with the selected compiler but fail in practice on embedded hardware. What is faulty? For example, the target compiler may optimize more aggressively while a qualifier such as volatile is missing in the source. That is a real failure in the source, but it was not detected by the test run with lower optimization. Other similar mistakes may exist in software too.
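A minimal sketch of the volatile case, assuming a POSIX system: a signal handler stands in for an interrupt service routine on the target, and all names are hypothetical. With the volatile qualifier the wait loop re-reads the flag on every pass. If the qualifier is removed, a compiler optimizing at -O2 is allowed to read the flag only once, so the loop may never end, while at -O0 the fault usually stays hidden.

```c
#define _POSIX_C_SOURCE 200112L
#include <signal.h>
#include <unistd.h>

/* Hypothetical flag, set asynchronously by a signal handler which stands in
 * for an interrupt service routine on the target. 'volatile' forces a new read
 * on each loop pass; without it an optimizing compiler may read it only once. */
static volatile sig_atomic_t bReady_sketch = 0;

static void onAlarm_sketch(int sig) {
  (void)sig;
  bReady_sketch = 1;
}

/* Busy-waits until the handler has set the flag (SIGALRM after about 1 s). */
int waitForReady_sketch(void) {
  bReady_sketch = 0;
  signal(SIGALRM, onAlarm_sketch);
  alarm(1);
  while(!bReady_sketch) { /* polling, as a main loop on the target might do */ }
  return bReady_sketch;
}
```

Calling waitForReady_sketch() returns 1 after about one second, independent of the optimization level, because the flag is volatile.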

In conclusion, the compiler and its optimization level should be chosen carefully. The test should be done with more than one compiler and with different optimization levels. For nightly tests the night may be long enough.

The next question is: "Test in the real target hardware?" An important answer is: "The test should not only be done in the special hardware environment; the sources should be tested in different environment situations." For example, an algorithm may work properly in the special hardware environment only because some data are restricted there, while the algorithm is actually faulty. Therefore, test it in different situations.

But the test in the real target environment, with the target compiler, running inside the real hardware platform may be the last of all tests. It can be done as integration test of course, but the modules can be tested in this manner too.

It means: the test should compile for the target platform, load the result into the target hardware, run there and report errors, for example via a serial output, but still run as a module test. Because not all modules may fit into one binary (it would be too large), the build and test process should divide the modules into proper portions and test them one after another, or in parallel on more than one hardware board.

3.2. a: Individual Tests in an IDE with step by step debugging

There are some IDE ("Integrated Development Environment") project files:

-

IDE/MSVC/AllTest_emC_Base.sln: Visual Studio

-

IDE/EclCDT/emC_Test/.cproject: Eclipse CDT, import it in an Eclipse environment, see also ../../../SwEng/html/EclipseWrk.de.html.

-

TODO for embedded platform

Offering special test projects for various topics has not proven successful, because maintaining more projects takes too much effort. Instead, there is exactly one project per platform (that is, at least two: one for Visual Studio and one for Eclipse CDT). To test a special topic, there is a main routine in which the calls are commented out and only the interesting call is active, for single-stepping in the debugger. This is simple to do.

#ifdef DEF_MAIN_emC_TestAll_testSpecialMain
int main(int nArgs, char const*const* cmdArgs )
{
  STACKTRC_ROOT_ENTRY("main");
  test_Exception();
  test_stdArray();
  //test_Alloc_ObjectJc();
  test_ObjectJc();
  //testString_emC();

This is a snapshot of the current situation. This main routine is used for both IDEs.

The include path is IDE- and configuration-specific. The two IDEs use different paths for the

#include <applstdef_emC.h>

These files are changed to select the different variants of emC compilation. Of course each commit simply contains the last used setting, which is not in every case a meaningful development state.

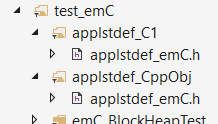

The applstdef_emC.h variants are located in

D:\vishia\emc\Test_emC\src\test\MSVC\All_Test

1.651 AllTest_emC_Base.sln

<DIR> applstdef_C1

<DIR> applstdef_CppObj

This is for Visual Studio. For Eclipse CDT a corresponding but separate set of files exists, see the project.

3.3. b: Compile and test for individual situations with gcc or another compiler maybe for the target

This may be seen as preparation for the nightly 'test all' (c), but also as an intermediate test during development.

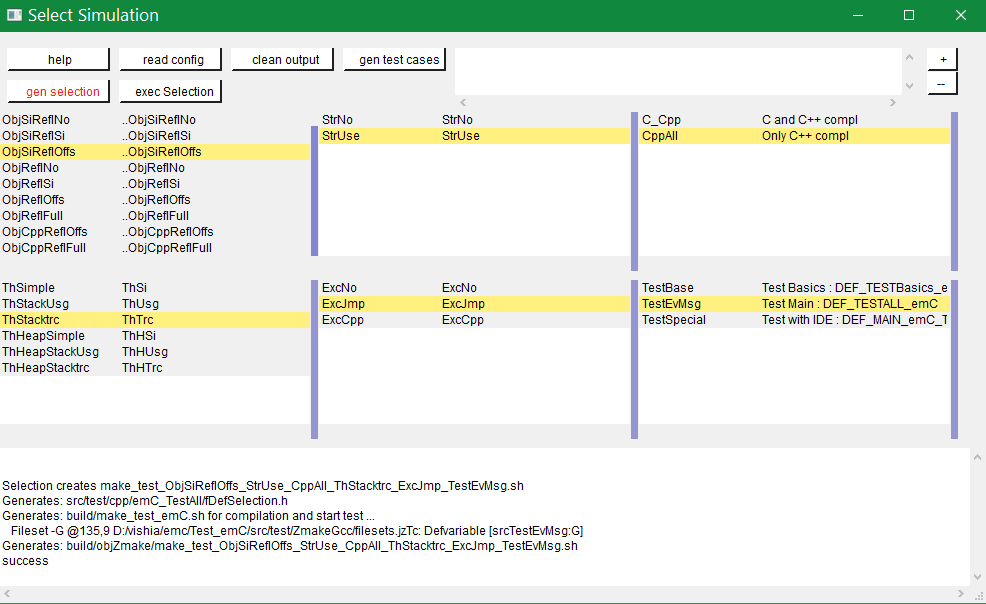

For this approach the Test Selection GUI can be used (chapter #testSelection). The test cases and test conditions are set in the GUI. On the button [ gen selection ] the GUI produces the necessary make..sh files and the file src/test/cpp/emC_TestAll/fDefSelection.h, which contains settings (#define) for compilation. This file is used both by the script-controlled test and by the test in the IDE, for example Visual Studio. Both projects should include this file to have the same settings. Hence an error shown as test result can be explored step by step in the IDE.

3.4. b, c: Generate the scripts for compile and test

The emC test does not use the familiar make approaches. Why?

Standard make files with complex settings are not simple to read, write and understand. Hence a more transparent system named Zmake was established years ago (starting in the 1990s). It uses a JZtxtcmd script to generate shell scripts which invoke the compilation. These script files are the sources that determine what is made and how.

The output of a Zmake invocation is a shell script (*.sh) which contains each compiler invocation as a command line with all options visible. It is not a make script which builds the compiler invocation internally from dependencies, settings etc. The advantage of the explicit compiler invocation: it immediately documents what happens.

These compiler invocation scripts are generated with JZtxtcmd, both by the Test Selection GUI (chapter #testSelection) and by the specific test scripts build.sh and those in the src/test/testScripts directory.

All these scripts are short and consist of two parts:

cd `dirname "$0"`/../../..
pwd
if ! test -e build; then src/buildScripts/-mkLinkBuild.sh; fi
#REM invokes JZtxtcmd as main class of vishiaBase with this file:
java -jar libs/vishiaBase.jar src/test/testScripts/testBasics_Simple.jzTc.sh
##Execute the just generated sh scripts, compile and execute:
build/testBasics_Simple.sh
read -n1 -r -p "Press any key to continue..."
exit 0
##the rest of the file is the JZtxtcmd script

Above is the shell script part, which invokes JZtxtcmd (the main class of the jar) with the script file itself. After that, the generated script is executed to compile and run the test.

The JZtxtcmd script part follows:

==JZtxtcmd==

include ../ZmakeGcc/test_Selection.jztsh;
currdir=<:><&scriptdir>/../../..<.>;
##Map ccSet; ##Settings for compilation
##String ccSet.cc = "clang";

main() {
call genTestcases(name = "testBasics_Simple", select =
<:><: >
1=ObjSiRefl; 2=ReflSi; 3=StrNo; 4=ThSimple; 5=ExcJmp; 6=TestBase
+ 1=ObjCppAdr; 2=ReflFull; 3=StrUse; 4=ThHeapStacktrc; 5=ExcCpp; 6=TestBase
<.>); ##Generate all relevant test cases
}

It includes the common generation script and, in main(), invokes the common genTestcases(…) routine from the included script with specific arguments. These arguments come from the Test Selection GUI (chapter #testSelection).

How does the common genTestcases(…) routine work? It generates texts, selects files from Filesets etc. See the comments in the script src/test/ZmakeGcc/test_Selection.jztsh.

3.5. a,b: Test Selection - arrange test cases

The problem in testing the core emC sources is the number of variants (currently 124 combinations) for ObjectJc, exception handling etc. Writing many scripts and adjusting the compile switches in applstdef_emC.h would take too much effort. Hence a 'Select Simulation' tool is used, which originally comes from a Simulink stimuli selection written by me in the past. It is written in Java and contained in libs/vishiaGui.jar. This tool works with tables.

The same tables as for the interactive graphic tool are used to arrange the conditions for the test cases. For example, the table for selecting the kind of reflection generation looks like this (file src/test/ZmakeGcc/test_Selection.jztsh):

List tabRefl @name =

[ { name="ObjSiReflNo", descr="..ObjSiReflNo", sh="n", def1="DEF_REFLECTION_NO",

, { name="ObjSiReflSi", descr="..ObjSiReflSi", sh="i", def1="DEF_REFLECTION_SIMPLE",

, { name="ObjSiReflOffs", descr="..ObjSiReflOffs", sh="o", def1="DEF_REFLECTION_OFFS",

, { name="ObjReflNo", descr="..ObjReflNo", sh="N", def1="DEF_REFLECTION_NO" }

, { name="ObjReflSi", descr="..ObjReflSi", sh="I", def1="DEF_REFLECTION_SIMPLE" }

, { name="ObjReflOffs", descr="..ObjReflOffs", sh="O", def1="DEF_REFLECTION_OFFS" }

, { name="ObjReflFull", descr="..ObjReflFull", sh="F", def1="DEF_REFLECTION_FULL" }

, { name="ObjCppReflOffs", descr="..ObjCppReflOffs", sh="P", def1="DEF_REFLECTION_OFFS",

, { name="ObjCppReflFull", descr="..ObjCppReflFull", sh="Q", def1="DEF_REFLECTION_FULL",

, { name="ObjCppAdrReflOffs", descr="..ObjCppAdrReflOffs", sh="R", def1="DEF_REFLECTION_OFFS",

, { name="ObjCppAdrReflFull", descr="..ObjCppAdrReflFull", sh="S", def1="DEF_REFLECTION_FULL",

];

It is a data list, see ../../../JZtxtcmd/html/JZtxtcmd.html. The short key character (sh=…) appears both in the list and in the 'Select Simulation'. The table directly contains the necessary compiler switches for each variant.

With the information about the selected lines, the subroutine

sub genSelection(Map line1, Map line2, ..., String testoutpath) { ...

is invoked. It gets the selected line of each table; line1 is from the table above. Because the texts are contained in the line, the compiler switches in the test script can be arranged in a simple way.

For execution of some test cases the same information is used.

sub genTestcases(String select, String name) { ...

receives in the select String the information which lines are used, for example in this snippet of src/test/testScripts/testBasics_Simple.jzTc.sh:

main() {
##Generate all relevant test cases
call genTestcases(name = "testBasics_Simple", select =
<:><: >
1=ObjSiReflNo; 2=StrNo; 3=CppAll; 4=ThSimple; 5=ExcJmp; 6=TestBase
+ 1=ObjCppAdrReflFull; 2=StrUse; 3=CppAll; 4=ThHeapStacktrc; 5=ExcCpp; 6=TestBase
<.>); ##Generate all relevant test cases
}

The syntax of the select String is described in ../../../smlk/html/SmlkTimeSignals/SmlkTimeSignals.html#truegenerating-manual-planned-test-cases which uses the same tool for another approach. The combination is done with the called Java routine (part of vishiaBase.jar). See ../../../Java/docuSrcJava_vishiaBase/org/vishia/testutil/TestConditionCombi.html.

3.6. Arranging the necessary compile units

The 6th table in the 'Select Simulation' contains what is to be tested (the other tables contain under which conditions it is to be tested). It looks like this (shortened):

List tabTestSrc =
[ { name="TestBase", srcSet="srcTestBasics", def1="DEF_TESTBasics_emC"}
, { name="TestEvMsg", srcSet="srcTestEvMsg", def1="DEF_TESTALL_emC" }
];

The srcSet is the name of a file set defined in the script src/test/ZmakeGcc/filesets.jzTc. It determines which files are used and may also reference further filesets:

##
## main file for Basic tests.
##
Fileset srcTestBasics =
( src/test/cpp:emC_TestAll/testBasics.cpp
, src/test/cpp:emC_TestAll/test_exitError.c
, &srcTest_ObjectJc
, &srcTest_Exception
, &src_Base_emC_NumericSimple
);

A Fileset is a core capability from ../../../JZtxtcmd/html/JZtxtcmd.html. It names some files and sub Filesets.

A Fileset names the files needed for a specific goal. This information can also be used to select which emC files are needed as part of a (possibly simple) application:

Fileset src_Base_emC_NumericSimple =
( src/main/cpp/src_emC:emC_srcApplSpec/SimpleNumCNoExc/fw_ThreadContextSimpleIntr.c
, src/main/cpp/src_emC:emC_srcApplSpec/SimpleNumCNoExc/ThreadContextSingle_emC.c
, src/main/cpp/src_emC:emC_srcApplSpec/applConv/LogException_emC.c
);

The fileset for the core files:

##
##The real core sources for simple applications only used ObjectJc.
##See sub build_dbgC1(), only the OSAL should be still added.
##
Fileset c_src_emC_core =
( src/main/cpp/src_emC:emC/Base/Assert_emC.c
, src/main/cpp/src_emC:emC/Base/MemC_emC.c
, src/main/cpp/src_emC:emC/Base/StringBase_emC.c
, src/main/cpp/src_emC:emC/Base/ObjectSimple_emC.c
, src/main/cpp/src_emC:emC/Base/ObjectRefl_emC.c
, src/main/cpp/src_emC:emC/Base/ObjectJcpp_emC.cpp
, src/main/cpp/src_emC:emC/Base/Exception_emC.c
, src/main/cpp/src_emC:emC/Base/ExceptionCpp_emC.cpp
, src/main/cpp/src_emC:emC/Base/ExcThreadCxt_emC.c
, src/main/cpp/src_emC:emC/Base/ReflectionBaseTypes_emC.c
, src/main/cpp/src_emC:emC_srcApplSpec/applConv/ExceptionPrintStacktrace_emC.c
##Note: Only for test evaluation
, src/main/cpp/src_emC:emC/Test/testAssert_C.c
, src/main/cpp/src_emC:emC/Test/testAssert.cpp
, src/test/cpp:emC_TestAll/outTestConditions.c
, &src_OSALgcc
, src/main/cpp/src_emC:emC_srcApplSpec/applConv/ObjectJc_allocStartup_emC.c
);

These are also the core sources for the test. An application which uses emC may not need all of them, but its core sources can be selected from this set. The fileset documents what is necessary and, indirectly, the dependencies.

3.7. Distinction of several variants of compilation

C and C++ compilation are distinguished by using either gcc for *.c files or g++, which always compiles as C++. This is done in the specific build_… routines; several of them exist for different file sets and for the choice between C and C++ compilation.

Variants of conditional compilation (see ../Base/Variants_emC.html) are distinguished by the content of the cc_def variable, which contains '-D …' arguments for the compilation. The alternative, recommended for user applications, is to select different <applstdef_emC.h> files by varying the include path; this needs several applstdef_emC.h files. This is supported as well: the part of the include path to <applstdef_emC.h> is contained in the cc_def variable.
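To illustrate how such a switch reaches the source, here is a small sketch. It assumes that exactly one of the DEF_REFLECTION_… switches from the table above is given, either as a '-D …' argument in cc_def or via a generated header like fDefSelection.h; the function names and the default are hypothetical, not part of emC.

```c
#include <string.h>

/* Hypothetical default, only to keep the sketch compilable without any -D switch. */
#if !defined(DEF_REFLECTION_NO) && !defined(DEF_REFLECTION_SIMPLE) \
 && !defined(DEF_REFLECTION_OFFS) && !defined(DEF_REFLECTION_FULL)
  #define DEF_REFLECTION_SIMPLE
#endif

/* Returns the compiled-in reflection variant, e.g. for the test output. */
char const* reflectionVariant_sketch(void) {
#if defined(DEF_REFLECTION_FULL)
  return "ReflFull";
#elif defined(DEF_REFLECTION_OFFS)
  return "ReflOffs";
#elif defined(DEF_REFLECTION_SIMPLE)
  return "ReflSi";
#else
  return "ReflNo";
#endif
}

/* True if the given variant name is the compiled-in one. */
int isVariant_sketch(char const* name) {
  return strcmp(name, reflectionVariant_sketch()) == 0;
}
```

Compiled with gcc -D DEF_REFLECTION_FULL, reflectionVariant_sketch() returns "ReflFull"; without any switch it returns "ReflSi" because of the sketch's default.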

3.8. Check dependency and rebuild capability

A file should be compiled:

-

If the object file does not exist

-

If the source file is newer than the object file (or more exactly: The content of the source file was changed in comparison to the content of the last compilation).

-

If any of the included source files (e.g. headers) is newer than the object file (or, more exactly, was changed since its last use).

An ordinary make file checks the first two conditions only with the 'is newer' criterion. For the third condition (indirect changes) the dependencies between the files must be known. In a classic make file these dependencies can be specified, if they are known. In practice the dependencies depend on the include situation, which is not simple: the real dependencies can only be detected for a concrete version of the files, and the make script would have to be corrected on each change. IDEs usually handle this properly with their native dependency check.

Because this cannot be done easily, a 'build all' mentality is common.

For repeated compilation, 'build all' needs too much time.

For this approach the Java package org.vishia.checkDeps_C is used.

This tool uses a comprehensive file deps.txt which contains the dependencies of each file together with its time stamp and content state (via CRC checksum). The tool checks the time stamp and the content of all dependent files from the list. If a file has changed, it is parsed, its include statements are found, and the dependencies are rebuilt from this level. Firstly, of course, the object file must be recompiled, because the content has changed. Secondly, the dependencies are corrected for later checks.

Because the dependency file contains the time stamp of each source file, an older file is detected as well. The time stamps compared are not those of source and object; the last used time stamp of the source is compared with its current time stamp. Hence a recompilation is also done if the file is older, not only if it is newer than the object file. This situation is to be expected if a file is changed by a checkout from a file repository with its original (older) time stamp. Because git and some other Unix/Linux tools write a restored file with the current time stamp, this problem does not occur there; Windows, however, preserves the time stamp of a copied file, which may be the better approach and is supported here.
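The difference between the make criterion and the check described above can be sketched as follows. The structure, the function names and the rule "compare the content only if the time stamp differs" are assumptions for illustration; the real deps.txt format and the CheckDeps logic are not reproduced.

```c
#include <stdbool.h>
#include <stdint.h>
#include <time.h>

/* Hypothetical dependency entry: state of a source file at its last use. */
typedef struct DepEntry_sketch {
  time_t timeLastUsed;
  uint32_t crcLastUsed;
} DepEntry_sketch;

/* Criterion of an ordinary make file: only a newer source triggers a compilation. */
bool isNewer_make_sketch(time_t timeSrc, time_t timeObj) {
  return timeSrc > timeObj;
}

/* Criterion described above: any difference of the time stamp, newer or older,
 * leads to a content comparison; an unchanged time stamp needs no file read. */
bool isChanged_sketch(DepEntry_sketch const* last, time_t timeNow, uint32_t crcNow) {
  if(timeNow == last->timeLastUsed) { return false; }
  return crcNow != last->crcLastUsed;
}
```

A source restored with an older time stamp (900 instead of 1000) is missed by isNewer_make_sketch() against an object file of time 1000, but detected by isChanged_sketch() if its content differs.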

If the dependency file does not exist, the dependencies must be detected: a 'build all' is necessary and the dependency file is built. This is the situation on the first invocation after a clean.

The dependency file is stored inside the object directory:

...\build\objZmake\test_ObjRefl_ReflFull_ThSi_ExcNo_StrNo_TestEvMsg

<DIR> emC

<DIR> emC_Exmpl_Ctrl

<DIR> emC_srcApplSpec

<DIR> emC_srcOSALspec

<DIR> emC_TestAll

<DIR> emC_Test_Container

<DIR> emC_Test_Ctrl

<DIR> emC_Test_C_Cpp

<DIR> emC_Test_ObjectJc

<DIR> emC_Test_Stacktrc_Exc

362.272 deps.txt <<=======

8.330 checkDeps.out

295.817 emCBase_.test.exe

296 fDefSelection.h

0 ld_out.txt

This is a snapshot of the root of the object directory tree. deps.txt has about 360 kByte, which is not too large. The Java algorithm that reads this file and checks the dependencies of all files needs only milliseconds; Java is fast enough for this. It of course also runs on Linux.

You can view this file to explore the individual dependencies of each file, which may be informative.

The dependency check is part of each generated make_*.sh shell script:

...\build\objZmake

2.965 deps_test_ObjRefl_ReflFull_ThSi_ExcNo_StrNo_TestEvMsg.args

72.677 make_test_ObjRefl_ReflFull_ThSi_ExcNo_StrNo_TestEvMsg.sh

<DIR> test_ObjRefl_ReflFull_ThSi_ExcNo_StrNo_TestEvMsg

....
echo run checkDeps, see output in build/...testCase/checkDeps.out
java -cp libs/vishiaBase.jar org.vishia.checkDeps_C.CheckDeps ... ... --@build/objZmake/deps_test_ObjRefl_ReflFull_ThSi_ExcNo_StrNo_TestEvMsg.args ... ... > build/objZmake/test_ObjRefl_ReflFull_ThSi_ExcNo_StrNo_TestEvMsg/checkDeps.out

(The java invocation is one long line.)

Checking an unchanged situation only requires reading the time stamps of all dependent files; this is very fast because the file system is usually cached.

If the dependencies have to be evaluated anew, all source files are parsed; included files that were already parsed are of course not processed twice. Parsing and searching for #include statements takes only a short time because Java is fast. The gcc compiler itself supports a dependency check too, but it is much slower (not because C++ is slow, but because it is more complex). The checkDeps dependency check is simpler; for example, it ignores conditional compilation (a conditional include). This means it may detect a dependency on an included file which is not active in the given compilation. That is not a disadvantage: the dependency may exist, and an occasional unnecessary compilation caused by one conditional include costs no more time than an elaborate dependency check.
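A minimal sketch of such a simplified include scan, assuming the source text is already in memory. Like the check described above it deliberately ignores #if/#ifdef, so a conditional include is reported as a dependency. It is a hypothetical illustration, not the org.vishia.checkDeps_C implementation.

```c
#include <string.h>

/* Hypothetical include scanner: collects the names of all '#include "..."' and
 * '#include <...>' lines, also '# include'. Conditional compilation (#if, #ifdef)
 * is deliberately ignored. Returns the number of names stored in 'names'. */
int scanIncludes_sketch(char const* src, char names[][64], int maxNames) {
  int n = 0;
  char const* line = src;
  while(line != NULL && *line != '\0' && n < maxNames) {
    char const* eol = strchr(line, '\n');
    char const* p = line;
    while(*p == ' ' || *p == '\t') { p++; }
    if(*p == '#') {
      p++;
      while(*p == ' ' || *p == '\t') { p++; }
      if(strncmp(p, "include", 7) == 0) {
        p += 7;
        while(*p == ' ' || *p == '\t') { p++; }
        char close = (*p == '"') ? '"' : (*p == '<') ? '>' : '\0';
        if(close != '\0') {
          char const* start = p + 1;
          char const* end = strchr(start, close);
          /* the closing character must be on the same line */
          if(end != NULL && (eol == NULL || end < eol) && end > start && end - start < 64) {
            size_t len = (size_t)(end - start);
            memcpy(names[n], start, len);
            names[n][len] = '\0';
            n++;
          }
        }
      }
    }
    line = (eol != NULL) ? eol + 1 : NULL;
  }
  return n;
}
```

For a text with the lines #include <applstdef_emC.h>, #ifdef DEF_X, # include "a.h" and #endif, the scan reports both applstdef_emC.h and a.h, although a.h is only conditionally included.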

If an object file has to be recompiled, the checkDeps algorithm deletes it; the build script then recompiles it because it checks for the existence of each object file before compiling. This is a simple coupling between these independent tools.
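The coupling can be sketched in two steps, with hypothetical names: the dependency check only deletes an outdated object file, and the build step compiles exactly when the object file is missing. No other information has to be exchanged between the two tools.

```c
#include <stdbool.h>
#include <stdio.h>

/* True if the file can be opened, i.e. it exists. */
static bool exists_sketch(char const* path) {
  FILE* f = fopen(path, "rb");
  if(f == NULL) { return false; }
  fclose(f);
  return true;
}

/* Step 1, dependency check: remove the object file if its sources have changed. */
void invalidateObj_sketch(char const* objPath, bool bSrcChanged) {
  if(bSrcChanged) { remove(objPath); }
}

/* Step 2, build script: compile only if the object file is missing. */
bool mustCompile_sketch(char const* objPath) {
  return !exists_sketch(objPath);
}
```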

4. General approaches for testing of sources on a PC platform with evaluable text output

The test takes some ideas from the Google Test framework (see https://chromium.googlesource.com/external/github.com/pwnall/googletest/+/refs/tags/release-1.8.0/googletest/docs/Primer.md) but is simpler.

The primary goal is to produce a proper test output to a file while the program is running. This needs organization.

The few necessary sources are contained in emC/Test/*, especially emC/Test/testAssert.h.

Any operation (C function) can be used for a test. It is not necessary to mark it as a "TEST" operation. Usually you have a main() which invokes some operations one after another, each executing some tests.

4.1. Frame for test

Inside an operation the begin and the end of the test is marked with:

int anyOperation(int mayhaveArgs) {
  STACKTRC_ENTRY("anyOperation");
  TEST_TRY("Description of the test case") {
    //
    //... any stuff to test
    //
  }_TEST_TRY_END
  STACKTRC_RETURN;
}

The macros STACKTRC_… are necessary for exception messaging with stacktrace-report, see ../Base/ThCxtExc_emC.html.

The TEST_TRY(…) macro declares a bTESTok variable and reports the start. For PC usage this macro is defined as

//in source: src/src_emC/cpp/emC/Test/testAssert.h
#define TEST_TRY(MSG) bool bTESTok = true; \
  TRY { msgStartFileLine_testAssert_emC(MSG, __FILE__, __LINE__);

The predefined macros `__FILE__` and `__LINE__` write the file and line information into the test output, which is important to trace test results back to the sources.

The _TEST_TRY_END checks the bTESTok variable and writes a proper message or stores data for the test result. It also contains the exception handling for unexpected exceptions during the test. For PC usage this macro is defined as

//in source: src/src_emC/cpp/emC/Test/testAssert.h
#define TEST_TRY_END } _TRY CATCH(Exception, exc) { \
  bTESTok = false; \
  exceptionFileLine_testAssert_emC(exc, __FILE__, __LINE__); \
} END_TRY msgEndFileLine_testAssert_emC(bTESTok);

The same can also be done manually, with more writing overhead, but perhaps showing more obviously what happens:

int anyOperation(int mayhaveArgs) {
  STACKTRC_ENTRY("anyOperation");
  TEST_START("test_ctor_ObjectJc");
  TRY {
    //
    //... any stuff to test
    //
  }_TRY;
  CATCH(Exception, exc) {
    TEST_EXC(exc);
  }
  END_TRY;
  TEST_END;
  STACKTRC_RETURN;
}

The corresponding macros are defined as:

//in source: src/src_emC/cpp/emC/Test/testAssert.h
#define TEST_START(MSG) bool bTESTok = true; \
  msgStartFileLine_testAssert_emC(MSG, __FILE__, __LINE__)
#define TEST_EXC(EXC) bTESTok = false; \
  exceptionFileLine_testAssert_emC(EXC, __FILE__, __LINE__)
#define TEST_END msgEndFileLine_testAssert_emC(bTESTok);

That is all for the test frame.
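For experimenting without emC, the frame can be imitated with a few lines. This is a simplified, hypothetical sketch: no exception handling, no stack trace, no variable arguments, and its own _sketch names instead of the emC macros from testAssert.h.

```c
#include <stdbool.h>
#include <stdio.h>

static int nFailedCases_sketch = 0;   /* hypothetical summary counter */

static void msgStart_sketch(char const* msg, char const* file, int line) {
  printf("Test: %s (@%d: %s)\n", msg, line, file);
}

static void msgEnd_sketch(bool bOk) {
  if(!bOk) { nFailedCases_sketch += 1; }
  fputs(bOk ? "  Test ok\n" : "  Test failed\n", stdout);
}

/* Simplified stand-ins for TEST_START, CHECK_TRUE and TEST_END. */
#define TEST_START_SKETCH(MSG) bool bTESTok = true; msgStart_sketch(MSG, __FILE__, __LINE__)
#define CHECK_TRUE_SKETCH(COND, MSG) \
  if(!(COND)) { printf("  ERROR: %s (@%d: %s)\n", MSG, __LINE__, __FILE__); bTESTok = false; }
#define TEST_END_SKETCH msgEnd_sketch(bTESTok)

/* A test case is an ordinary C function, called e.g. from main(). */
void test_addition_sketch(int a, int b, int expected) {
  TEST_START_SKETCH("addition");
  CHECK_TRUE_SKETCH(a + b == expected, "a + b == expected");
  TEST_END_SKETCH;
}
```

Calling test_addition_sketch(2, 3, 5) prints only the start and "Test ok"; test_addition_sketch(2, 2, 5) additionally prints an ERROR line with file and line and counts one failed case.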

Of course, selecting test cases with a specific tool (as with gradle tests or similar) is not possible. Instead, test cases are ordinary program code, and specific test cases can be selected with ordinary conditional compilation. This needs no special knowledge.

4.2. Exceptions on test

For exceptions see ../Base/ThCxtExc_emC.html.

The test should run under exception control. On a PC platform this should not be a problem.

Either C++ try-catch or one of the other possibilities can be used.

It is the same as set elsewhere in <applstdef_emC.h>.

Hence: if complex algorithms are tested, the exception handling should be activated.

Only when testing the exception handling itself might it be set to DEF_NO_Exception_emC or DEF_NO_THCXT_STACKTRC_EXC_emC, which disable the exceptions.

This TRY-CATCH frame only catches an exception which is not caught inside the tested algorithm. An exception caught there is part of the algorithm itself.

If an unexpected exception occurs during the test, the msgEndFileLine_testAssert_emC(…) routine is not reached; instead the exceptionFileLine_testAssert_emC(…) routine is invoked. It writes the exception with file and line and, if possible (depending on the setting of DEF_ThreadContext_STACKTRC), the stack trace, to evaluate where and why the exception occurred.
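How an unexpected exception bypasses the regular end of a test case can be sketched with setjmp/longjmp, a mechanism which a longjmp-based exception variant such as ExcJmp in the test selection presumably relies on. This is an illustration with hypothetical names under that assumption; it does not reproduce the emC TRY/CATCH macros.

```c
#include <setjmp.h>
#include <stdbool.h>
#include <stdio.h>

static jmp_buf* pCurrentTry_sketch = NULL;   /* innermost active test frame */
static int excValue_sketch = 0;

/* Hypothetical throw: jumps back to the innermost frame. */
static void throw_sketch(int value) {
  excValue_sketch = value;
  longjmp(*pCurrentTry_sketch, 1);
}

/* Algorithm under test: throws on a division by zero. */
static int divide_sketch(int a, int b) {
  if(b == 0) { throw_sketch(1); }
  return a / b;
}

/* Returns true if the test case ended regularly, false if an unexpected
 * exception was caught by the test frame. */
bool runTestCase_sketch(int a, int b) {
  jmp_buf env;
  jmp_buf* pPrev = pCurrentTry_sketch;
  bool bTESTok = true;
  pCurrentTry_sketch = &env;
  if(setjmp(env) == 0) {
    int r = divide_sketch(a, b);
    printf("  ok: result %d\n", r);          /* regular end of the test case */
  } else {
    bTESTok = false;                         /* regular end skipped, report the exception */
    printf("  EXCEPTION %d in test case\n", excValue_sketch);
  }
  pCurrentTry_sketch = pPrev;
  return bTESTok;
}
```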

4.3. Execute tests

The tests themselves check and log results. For example, the test in emC_Test_ObjectJc/test_ObjectJc.c:

bool bOk = CHECKstrict_ObjectJc(&data->base.obj
    , sizeof(myData1const), refl_MyType_Test_ObjectJc, 0);
CHECK_TRUE(bOk, "checkStrict_ObjectJc with refl and without instance id.")

First a boolean test result is built. Then CHECK_TRUE is invoked with this result and a text which describes the expected positive result. CHECK_TRUE produces output only if the check fails:

ERROR: checkStrict_ObjectJc with refl and without instance id. (@38: emC_Test_ObjectJc\test_ObjectJc.c)

(in one line). Using TEST_TRUE instead also outputs the positive result:

ok: checkStrict_ObjectJc with refl and without instance id.

This documents which tests were done. The output is usable for a test on a PC as well as on an embedded platform with text output capability.

The test can also output values, in a printf manner. For example in emC_Test_Math/Test_Math_emC.c the results of mathematics are checked:

TEST_TRUE(rs == (int32)values[ix].rs
  , "muls16_emC %4.4X * %4.4X => %8.8X"
  , (int)(as) & 0xffff, (int)bs & 0xffff, rs);

It outputs for example:

ok: muls16_emC 4000 * FFFF => FFFFC000 ....

and reports the numeric test result, here for a fixed-point multiplication. The test is important because fixed-point multiplication can fail on an embedded platform if register widths etc. differ from what is expected. CHECK_TRUE may be more appropriate here, reporting only faulty results; the printed faulty value helps to find the cause of the fault.
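A table-driven check of this kind can be sketched as follows. The multiplication is a hypothetical stand-in for muls16_emC (signed 16 x 16 => 32 bit), and the expected values are computed by hand: 0x4000 * 0xFFFF interpreted as signed 16 bit is 16384 * -1 = -16384, which is 0xFFFFC000 as a 32-bit pattern, matching the output line above. Only faulty results are printed, in CHECK_TRUE style.

```c
#include <stdint.h>
#include <stdio.h>

/* Hypothetical stand-in; the real muls16_emC may use target-specific code. */
static int32_t muls16_sketch(int16_t a, int16_t b) {
  return (int32_t)a * (int32_t)b;   /* widen first, so the product cannot overflow */
}

typedef struct { int16_t a, b; int32_t rs; } MulsValue_sketch;

/* Expected values, e.g. 0x4000 * 0xFFFF (-1) => 0xFFFFC000 (-16384). */
static MulsValue_sketch const values_sketch[] = {
  { 0x4000, -1, -16384 },
  { 0x7FFF, 0x7FFF, 0x3FFF0001 },
  { -32768, -32768, 0x40000000 }
};

/* Returns the number of faulty results and prints only those. */
int testMuls16_sketch(void) {
  int nErr = 0;
  for(size_t ix = 0; ix < sizeof(values_sketch) / sizeof(values_sketch[0]); ++ix) {
    int16_t as = values_sketch[ix].a, bs = values_sketch[ix].b;
    int32_t rs = muls16_sketch(as, bs);
    if(rs != values_sketch[ix].rs) {
      printf("ERROR: muls16 %4.4X * %4.4X => %8.8X\n",
             (unsigned)as & 0xffffu, (unsigned)bs & 0xffffu, (unsigned)rs);
      nErr += 1;
    }
  }
  return nErr;
}
```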

Furthermore, the text can be prepared and reused for several test outputs. See the example testing a smoothing control block (T1) in test1_T1_Ctrl_emC() (src/test/cpp/emC_Test_Ctrl/test_T1i_Ctrl.c): the output of the control block for a step response is compared with the known mathematical result. The maximal deviation (error) is built in the variable dysh1_eMax. A small deviation is to be expected because the T1 FBlock has only integer accuracy with a defined step width. Compared with the exact mathematical behavior, a small difference is therefore expected and admissible. Hence the test is written as

CHECK_TRUE(dysh1_eMax < thiz->abbrTsh1, checktext[0]);

The deviation is compared with a given limit. Because several other tests are done as well, the texts are stored in an array and used for these tests. Example:

char checktext[8][100];
snprintf(checktext[0], 100, "%1.3f < abbreviation of Tsh1 4 Bit fTs", thiz->abbrTsh1);
snprintf(checktext[1], 100, "%1.3f < abbreviation of Tsh2 8 Bit fTs", thiz->abbrTsh2);
....
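How such a maximum deviation can arise from integer accuracy is shown by the following sketch. It is a hypothetical fixed-point T1 in Q16 format, not the emC FBlock. Instead of the exponential function it compares against the exact solution 1 - (1 - fTs)^k of the same difference equation, computed in double precision, so that the remaining deviation comes only from quantization; the names stepT1_Q16 and maxDeviationT1_sketch are assumptions:

```c
#include <stdint.h>

/* One step of a hypothetical fixed-point T1 (first-order lag), Q16 format:
 * y += (x - y) * fTs. Only the same kind of algorithm as a T1 FBlock. */
static int32_t stepT1_Q16(int32_t y, int32_t x, int32_t fTs_Q16) {
  return y + (int32_t)(((int64_t)(x - y) * fTs_Q16) >> 16);
}

/* Maximum deviation of the fixed-point step response (0 -> 1.0) from the
 * exact solution 1 - (1 - fTs)^k, computed in double. Requires 0 < fTs < 1. */
static double maxDeviationT1_sketch(double fTs, int steps) {
  int32_t const fTs_Q16 = (int32_t)(fTs * 65536.0 + 0.5); /* quantized factor */
  int32_t const x = 65536;   /* step input 1.0 in Q16 */
  int32_t y = 0;             /* fixed-point output */
  double qPow = 1.0;         /* (1 - fTs)^k */
  double eMax = 0.0;
  for (int k = 1; k <= steps; ++k) {
    y = stepT1_Q16(y, x, fTs_Q16);
    qPow *= 1.0 - fTs;
    double e = y / 65536.0 - (1.0 - qPow);
    if (e < 0) { e = -e; }
    if (e > eMax) { eMax = e; }
  }
  return eMax;
}
```

With, for example, fTs = 0.1 and 200 steps the deviation stays well below 0.001, so a limit of this kind could serve as the admissible deviation in a CHECK_TRUE as above.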

As you can see, what has to be tested can be complex, depending on the technical subject. But building the test result is very simple: the CHECK_TRUE(COND, TEXT, …) macro expects only a boolean (true or false) as first argument, TEXT as second argument which describes the expected behaviour, and optionally further variable arguments for the format placeholders in TEXT.

These test output macros are defined as:

//in source: src/src_emC/cpp/emC/Test/testAssert.h
/**Test, output ok MSG if ok, only on error with file and line. */
#define TEST_TRUE(COND, MSG, ...) \
  if(!expectMsgFileLine_testAssert_emC(COND, MSG, __FILE__, __LINE__, ##__VA_ARGS__)) bTESTok = false;

/**Checks only, output only if error*/
#define CHECK_TRUE(COND, MSG, ...) \
  if(!(COND)) { expectMsgFileLine_testAssert_emC(false, MSG, __FILE__, __LINE__, ##__VA_ARGS__); bTESTok = false; }

The only difference between TEST… and CHECK… is that CHECK… calls the inner operation expectMsgFileLine_testAssert_emC conditionally, hence nothing is output if the condition is true.

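The standard C macro `__VA_ARGS__` forwards the variable arguments in the macro expansion. The `##` before it is a compiler extension (supported e.g. by GCC and Clang) which removes the preceding comma if no variable arguments are given. The test output operation itself is defined as: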

//in source: src/src_emC/cpp/emC/Test/testAssert_C.c
//uses va_list, va_start, va_end from <stdarg.h> and printf, vsnprintf from <stdio.h>
bool expectMsgFileLine_testAssert_emC ( bool cond, char const* msg, char const* file, int line, ...) {
  char text[200];
  va_list args;
  va_start(args, line);
  vsnprintf(text, sizeof(text), msg, args); //prepare the positive text maybe with variable args
  va_end(args);
  if(cond) { printf(" ok: %s\n", text ); }
  else {
    char const* filename = dirFile(file);
    printf(" ERROR: %s (@%d: %s)\n", text, line, filename);
  }
  return cond;
}

As you can see, this is not complicated: it uses the well-known variable arguments of C together with vsnprintf(…), the size-limited va_list variant of the traditional sprintf(…).
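To try the pattern outside of emC, a minimal standalone sketch may help. All names with the suffix _sketch are hypothetical; fileName_sketch stands in for the emC helper dirFile(). Unlike the emC macros, this variant passes MSG as part of `__VA_ARGS__`, so no compiler extension is needed, and it wraps the macro body in `do { … } while (0)` so that the macro can be used safely before an `else`:

```c
#include <stdarg.h>
#include <stdbool.h>
#include <stdio.h>

/* Global test state, cleared by any failing check (like bTESTok in emC). */
static bool bTESTok = true;

/* Returns the part of a path after the last '/' or '\\'. */
static char const* fileName_sketch(char const* path) {
  char const* name = path;
  for (char const* p = path; *p != '\0'; ++p) {
    if (*p == '/' || *p == '\\') { name = p + 1; }
  }
  return name;
}

/* Formats msg with the variable arguments and prints ok or ERROR. */
static bool expectMsg_sketch(bool cond, char const* file, int line, char const* msg, ...) {
  char text[200];
  va_list args;
  va_start(args, msg);
  vsnprintf(text, sizeof(text), msg, args);
  va_end(args);
  if (cond) { printf("  ok: %s\n", text); }
  else { printf("  ERROR: %s (@%d: %s)\n", text, line, fileName_sketch(file)); }
  return cond;
}

/* Outputs ok or ERROR. The first variadic argument is the message. */
#define TEST_TRUE_SKETCH(COND, ...) \
  do { if (!expectMsg_sketch((COND), __FILE__, __LINE__, __VA_ARGS__)) { bTESTok = false; } } while (0)

/* Outputs only on error. */
#define CHECK_TRUE_SKETCH(COND, ...) \
  do { if (!(COND)) { expectMsg_sketch(false, __FILE__, __LINE__, __VA_ARGS__); bTESTok = false; } } while (0)
```

A call such as `CHECK_TRUE_SKETCH(2 * 2 == 5, "product is %d", 4);` prints one ERROR line with file name and line number and clears bTESTok.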

4.4. The test output

For tests on a PC or on an embedded platform with text output capability, a textual output is created.

By choosing between TEST_TRUE and CHECK_TRUE, one can control how much information about the executed tests is output. A test output with some executed tests looks like:

Test: test_ctor_ObjectJc: (emC_Test_ObjectJc/test_ObjectJc.c @ 89) ...
  ok: refl type is ok
  ok: INIZ_VAL_MyType_Test_ObjectJc
  ok: checkStrict_ObjectJc
ok

The test case starts with `Test: …` left-aligned, and the `ok` after the test is also written left-aligned as the finish line. In between, indented messages ` ok: …` document which checks were executed, or ` ERROR: …` if a check fails:

Test: test_ctor_ObjectJc: (emC_Test_ObjectJc\test_ObjectJc.c @ 89) ...
  ok: refl type is ok
  ERROR: INIZ_VAL_MyType_Test_ObjectJc (@99: emC_Test_ObjectJc\test_ObjectJc.c)
  ok: checkStrict_ObjectJc
ERROR

The simple form looks like:

Test: test_cos16: (emC_Test_Math\Test_Math_emC.c @ 228) ... ok
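The frame around a test case, the left-aligned `Test: …` start line and the final `ok` or `ERROR` line, can be sketched as follows. testStart_sketch, check_sketch and testEnd_sketch are hypothetical names, not the emC macros; a per-test-case flag collects the results of the checks in between:

```c
#include <stdbool.h>
#include <stdio.h>

static bool bTestCaseOk_sketch = true; /* cleared by any failed check */

/* Prints the left-aligned start line of a test case. */
static void testStart_sketch(char const* name, char const* file, int line) {
  bTestCaseOk_sketch = true;
  printf("Test: %s: (%s @ %d) ...\n", name, file, line);
}

/* Indented output only on failure (CHECK style). */
static void check_sketch(bool cond, char const* msg) {
  if (!cond) {
    printf("  ERROR: %s\n", msg);
    bTestCaseOk_sketch = false;
  }
}

/* Prints the left-aligned finish line and returns the result. */
static bool testEnd_sketch(void) {
  puts(bTestCaseOk_sketch ? "ok" : "ERROR");
  return bTestCaseOk_sketch;
}
```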

If an exception is thrown while the test algorithm is processed, then (using the TEST_TRY(…) macros) the test is aborted with an error message:

Test: test_ObjectJcpp_Base: (emC_Test_ObjectJc\test_ObjectJcpp.cpp @ 109) ...
EXCEPTION 1 (24, 0) @21: ..\..\src\test\cpp\emC_Test_ObjectJc\test_ObjectJcpp.cpp
RuntimeException: : 24=0x00000018
  at THROW (..\..\src\test\cpp\emC_Test_ObjectJc\test_ObjectJcpp.cpp:21)
  at test_ObjectJcpp_Base (..\..\src\test\cpp\emC_Test_ObjectJc\test_ObjectJcpp.cpp:109)
  at test_ObjectJcpp (..\..\src\test\cpp\emC_Test_ObjectJc\test_ObjectJcpp.cpp:214)
  at main (..\..\src\test\cpp\emC_TestAll\testBasics.cpp:21)
ERROR

4.5. Unexpected exceptions while test

The exception shown in the previous section occurred because the macro INIZsuper_ClassJc(…) was faulty: not all elements were initialized. The type is checked inside a C++ constructor, outside of the test itself, and that check caused the exception. In such a case the test is finished with the EXCEPTION … line, and the remaining tests of this block are not executed. That is fatal.

In addition, the stack trace is output. With it, the source of the exception could be found without elaborate debugging: line 21 contains an ASSERT_emC(…) which checks the base type. Setting a breakpoint there (Visual Studio) showed that the information about ClassJc…superClass was missing, caused by the faulty initialization macro.

It is also possible to write `TRY { … }_TRY CATCH { … }` statements inside the test to catch an exception thrown in the test algorithm. The CATCH block should then contain:

CATCH(Exception, exc) {
  TEST_EXC(exc);
}

This logs the unexpected exception in the test output. If the exception behaviour itself is the subject of the test case, it can be written as follows (see emC_Test_Stacktrc_Exc/TestException.cpp):

TRY{
  //raise(SIGSEGV);
  CALLINE; float val = testThrow(thiz, 10, 2.0f, _thCxt);
  printf("val=%f\n", val);
}_TRY
CATCH(Exception, exc) {
  CHECK_TRUE(exc->line == 46, "faulty line for THROW");
  bHasCatched = true;
}
FINALLY {
  bHasFinally = true;
} END_TRY;

TEST_TRUE(bHasCatched && bHasFinally, "simple THROW is catched. ");

A check can also be done inside the CATCH clause. But to verify that the CATCH block and, here, also the FINALLY block were entered, a boolean variable is set in each of them and tested after the TRY block.
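The flag technique can be tried without emC. The following hypothetical sketch uses the standard setjmp/longjmp, one common way to implement exception handling in C; it is not the emC TRY/CATCH/FINALLY implementation. The exception context is file-scope, so its content stays valid after longjmp, and the flags set in the "CATCH" and "FINALLY" parts are evaluated afterwards, as in the test above:

```c
#include <setjmp.h>
#include <stdbool.h>

/* Hypothetical exception context; file-scope, so its content stays valid
 * after longjmp (unlike non-volatile locals modified after setjmp). */
static struct { jmp_buf jmp; int excNr; int line; } excCtx_sketch;

/* "Throws" for a > 5 by a longjmp to the active "TRY". */
static float testThrow_sketch(int a, float b) {
  if (a > 5) {
    excCtx_sketch.excNr = 1;
    excCtx_sketch.line = __LINE__;
    longjmp(excCtx_sketch.jmp, 1);
  }
  return (float)a * b;
}

/* Returns true if both the "CATCH" and the "FINALLY" part were entered. */
static bool testCatchFinally_sketch(int a) {
  bool bHasCatched = false;
  bool bHasFinally = false;
  excCtx_sketch.excNr = 0;
  if (setjmp(excCtx_sketch.jmp) == 0) {  /* TRY */
    (void)testThrow_sketch(a, 2.0f);
  } else {                                /* CATCH */
    bHasCatched = true;
  }
  bHasFinally = true;                     /* FINALLY, entered in both paths */
  return bHasCatched && bHasFinally;
}
```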

The evaluation can be done with a simple text analysis, see ../TestOrg/testStrategie_en.html#trueview-of-test-results

4.6. Evaluation of textual test outputs

Each test for emC has two stages of test execution:

-

Compilation and linking: successful or not, with errors and warnings;

-

if the first stage succeeds: the textual result of the tests.

This means that three files are written in this case:

-

testcase.cc_err with the compiler and linker error messages and warnings

-

testcase.out with the test results of the run.

The testcase.out is produced as a nearly empty file (containing 1 space) if the test does not run.

Additionally, the following file is produced:

-

testcase.err with general error messages and the #define … of the test cases.

4.6.1. Manual view of test results

You can now view all these files in this folder (sorted by extension). If a cc_err file has length 0, the compilation was successful and without warnings.

Checking the test results is more complicated. You have to search for the word ERROR at the start of a line, because a message text may also contain the word ERROR somewhere inside. Doing this manually is costly.
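Such a search is easily automated. A minimal sketch, assuming the output format shown in the section on the test output: a line counts as failed if, after optional leading blanks, it starts with ERROR, so the word ERROR inside a message text is not counted. countErrorLines_sketch is a hypothetical helper working on the file content in memory:

```c
#include <string.h>

/* Counts lines which start with "ERROR" after optional blanks or tabs.
 * "ERROR" inside a message text is not counted. */
static int countErrorLines_sketch(char const* text) {
  int count = 0;
  char const* line = text;
  while (line != NULL && *line != '\0') {
    char const* p = line;
    while (*p == ' ' || *p == '\t') { ++p; }
    if (strncmp(p, "ERROR", 5) == 0) { ++count; }
    line = strchr(line, '\n');   /* start of next line, or NULL at the end */
    if (line != NULL) { ++line; }
  }
  return count;
}
```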

4.6.2. Evaluation of all files

If most tests are expected to pass, you can find the few faulty tests and look at what happened. But often there is a systematic error, and a whole row of tests fails for the same reason. Then an overview of which tests failed is helpful, in order to think about their common conditions.

That's why a test evaluation which only counts the number of failed tests is not sufficient.

The script file src/test/testScripts/evalTests.jztsh, which calls an operation of the Java class org.vishia.testutil.EvalTestoutfiles, checks all cc_err and out files in a given directory (the build/result folder).

The script iterates through all tables of the test cases (SimSelector) and gathers the relevant file names from the test case names; the file names are built from the name entries of the tables.

The Java operation then checks the content of the files and builds only one character per test case.

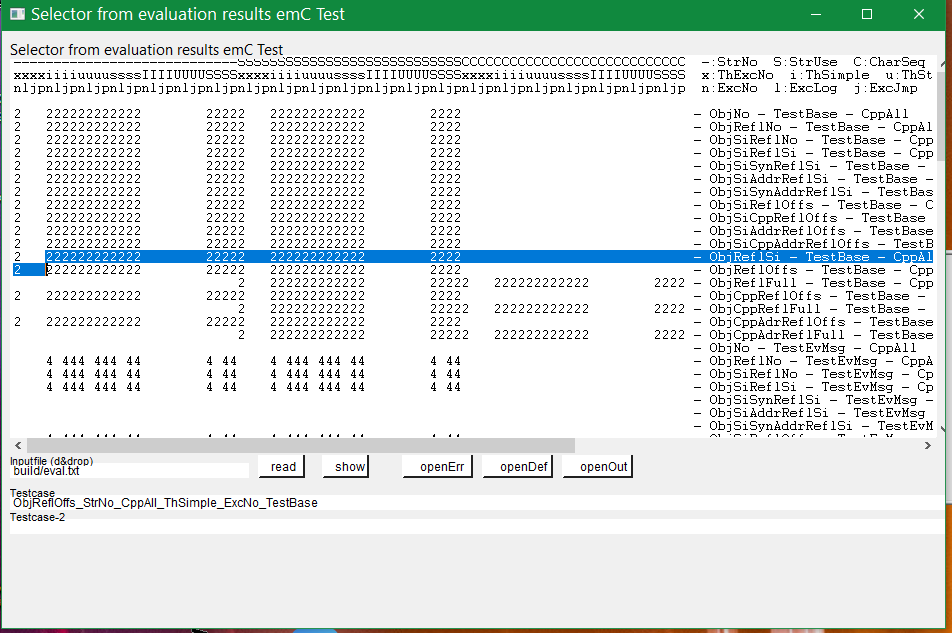

With these characters and an overview description of the cases, an output file is written as a simple text matrix, one character per test case. In this way, for example, 72 * 36 = 2592 results can be presented in a few lines (39 including the header). A 'well presented' HTML output with red, yellow and green lights would of course also be possible for this tool, but the simple text file is more compact for a fast overview.

-

A space (nothing visible) stands for a non-existing file, i.e. a test that is not planned.

-

E: No out file was found, but a cc_err file: there are compiler errors.

-

X: The out file contains more than 2 errors; the test is problematic.

-

x: The out file contains 1 or 2 errors; something is faulty.

-

v: There are test cases without "ok" but also without errors; the test organization is faulty.

-

W: as X, but there are also warnings in the cc_err file.

-

w: as x, but there are also warnings in the cc_err file.

-

u: as v, but there are also warnings in the cc_err file.

-

F: internal failure in the evaluation.

-

a..j: test is ok as with 0..9 (a corresponds to 0, j to 9), but there are warnings.

-

0..9: Test ok. The digit is (number of test cases + 9)/10, i.e. 1 for 1..10 test cases per test, 3 for 21..30 test cases per test, 9 for 81 or more test cases per test. With this number one can estimate how comprehensive the test is.
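The legend can be read as a small decision function. The following sketch assumes that the evaluation has already found the files and counted the errors and the passed test cases of one out file; evalChar_sketch and its parameters are hypothetical, and it is written in C for consistency with this document, not taken from the Java class org.vishia.testutil.EvalTestoutfiles. The F case for internal failures is omitted, and the digit is limited to 9:

```c
#include <stdbool.h>

/* Maps the evaluation of one test case to its legend character.
 * hasCcErr: cc_err file exists, hasOut: out file with content exists,
 * hasWarnings: warnings in cc_err, nErrors: ERROR lines, nOk: passed test cases. */
static char evalChar_sketch(bool hasCcErr, bool hasOut, bool hasWarnings,
                            int nErrors, int nOk) {
  if (!hasCcErr && !hasOut) { return ' '; }  /* not planned */
  if (!hasOut) { return 'E'; }               /* compiler errors */
  if (nErrors > 2) { return hasWarnings ? 'W' : 'X'; }
  if (nErrors > 0) { return hasWarnings ? 'w' : 'x'; }
  if (nOk == 0) { return hasWarnings ? 'u' : 'v'; }  /* test organization faulty */
  int digit = (nOk + 9) / 10;                /* 1 for 1..10, 3 for 21..30 */
  if (digit > 9) { digit = 9; }
  return (char)((hasWarnings ? 'a' : '0') + digit);
}
```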

This means that successful tests are marked with 0..9. Spaces denote variants that were not tested.

It looks like this (shortened):

------------------------------------SSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSS -:StrNo S:StrUse

iiiiiiuuuuuussssssIIIIIIUUUUUUSSSSSSiiiiiiuuuuuussssssIIIIIIUUUUUUSSSSSS i:ThSimple u:ThStackUs

nnjjppnnjjppnnjjppnnjjppnnjjppnnjjppnnjjppnnjjppnnjjppnnjjppnnjjppnnjjpp n:ExcNo j:ExcJmp p:Ex

CpCpCpCpCpCpCpCpCpCpCpCpCpCpCpCpCpCpCpCpCpCpCpCpCpCpCpCpCpCpCpCpCpCpCpCp C:C_Cpp p:CppAll

1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 - ObjSiReflNo - - TestB

1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 - ObjSiReflSi - - TestB

1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 - ObjSiReflOffs - - Tes

1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 - ObjReflNo - - TestBas

1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 - ObjReflSi - - TestBas

1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 - ObjReflOffs - - TestB

- ObjReflFull - - TestB

1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 - ObjCppReflOffs - - Te

1 1 1 1 1 1 1 1 1 1 1 1 - ObjCppReflFull - - Te

1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 - ObjCppAdrReflOffs - -

1 1 1 1 1 1 1 1 1 1 1 1 - ObjCppAdrReflFull - -

3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 - ObjSiReflNo - - TestE

3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 - ObjSiReflSi - - TestE

3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 - ObjSiReflOffs - - Tes

3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 - ObjReflNo - - TestEvM

3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 - ObjReflSi - - TestEvM

3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 - ObjReflOffs - - TestE

- ObjReflFull - - TestE

3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 - ObjCppReflOffs - - Te

3 3 3 3 3 3 3 3 3 3 3 3 - ObjCppReflFull - - Te

3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 - ObjCppAdrReflOffs - -

3 3 3 3 3 3 3 3 3 3 3 3 - ObjCppAdrReflFull - -

- ObjSiReflNo - - TestS

- ObjSiReflSi - - TestS

In this example no C compilation is done; all files are compiled with C++. Hence the C column is empty. The tests with DEF_ThreadContext_HEAP_emC but without DEF_ThreadContext_STACKTRC are also missing; they may be unnecessary because tests without DEF_ThreadContext_STACKTRC were already done. All tests with DEF_REFLECTION_FULL without String capability are not meaningful. These are the spaces here.

4.6.3. Selection in the evaluation file, open and reconstruct faults

Now another tool can be used, also controlled by the JZtxtcmd file: